Welcome to Streamr’s core dev team update for January 2020. A central focus for the past few months has been on building out our Data Unions (DU) developer framework. You might have known it as Community Products in the past but we’re renaming it now to unify the terminology before a wider launch.

The DU framework is the tech stack that Swash is built on top of, in addition to the Streamr Network. There were multiple complementary components that also needed to be adjusted in order to accommodate DU such as introducing a new type of product flow creation on the Marketplace and adding some features to our p2p Network. Continuing to bring all of that together over the next four months or so is the task ahead of us. Henri recently gave an internal breakdown of where we are. Here is an edited version of that overview.


Bringing Streamr’s DU framework Together

The backend of the DU framework has been running stably since Christmas. Recent backend work has mainly focused on getting proper automatic tests in place to verify functionality and prevent problems caused by changes in the future.

The remaining major things to do are:

- Finish the frontend work. This is at an advanced stage already.

- Work on state replication to achieve scalability. Currently, there’s only one DU server instance running, and it answers all queries about the current state of the community. These queries are sent by users of apps such as Swash. More users mean more queries, so beyond some number of simultaneously active users the single instance becomes overwhelmed. To solve this, multiple DU server instances (sometimes also referred to as validators) can be run, with queries load balanced among them. For this to work, every instance needs to compute the same state as the others. This is partially developed but untested. It’s the biggest missing piece of the puzzle at the moment.

- Smart contract audit. This will kick off in the next few weeks. Post-audit, the smart contracts used by private beta participants need to be redeployed. We are working to finalize the best migration option to make the transition as smooth as possible for existing beta testers.
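To make the state replication item above more concrete, here is a minimal sketch of the idea: several server instances replay the same ordered list of events, check that they arrive at the same state, and then take turns answering queries. Everything in it is an assumption made for illustration, including the `Replica` and `LoadBalancer` classes, the event shapes, and the equal revenue split. It is not the actual DU server code.

```python
import hashlib
import itertools
import json


class Replica:
    """One hypothetical DU server instance holding member balances."""

    def __init__(self, name):
        self.name = name
        self.balances = {}

    def apply(self, event):
        # State changes must be deterministic: same events in, same state out.
        if event["type"] == "join":
            self.balances.setdefault(event["member"], 0)
        elif event["type"] == "revenue" and self.balances:
            # Assumed rule for this sketch: split revenue equally.
            share = event["amount"] // len(self.balances)
            for member in self.balances:
                self.balances[member] += share

    def state_hash(self):
        # Sorted keys give a canonical encoding, so equal states hash equally.
        payload = json.dumps(self.balances, sort_keys=True).encode()
        return hashlib.sha256(payload).hexdigest()

    def query(self, member):
        return self.balances.get(member)


class LoadBalancer:
    """Round-robin dispatcher over a fixed set of replicas."""

    def __init__(self, replicas):
        self._cycle = itertools.cycle(replicas)

    def query(self, member):
        replica = next(self._cycle)
        return replica.name, replica.query(member)


# Hypothetical event log that every replica replays in the same order.
events = [
    {"type": "join", "member": "alice"},
    {"type": "join", "member": "bob"},
    {"type": "revenue", "amount": 100},
    {"type": "join", "member": "carol"},
    {"type": "revenue", "amount": 90},
]

replicas = [Replica(f"du-{i}") for i in range(3)]
for replica in replicas:
    for event in events:
        replica.apply(event)

# All replicas should agree before queries are spread among them.
hashes = {replica.state_hash() for replica in replicas}
balancer = LoadBalancer(replicas)
answers = [balancer.query("alice") for _ in range(3)]
```

Comparing a hash of the state rather than the state itself keeps the agreement check cheap. A replica whose hash differs from the others could be taken out of rotation before it answers any queries.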

When will this be delivered? By the end of Q1 we’ll ideally be in a state where the above tasks are complete and everything is in place to support more DU builders joining the private beta and battle-testing the tools. After that, existing DUs should grow as much as they can, allowing us to verify that the system behaves as expected under heavier load.

There could well be surprises along the way. (What is innovation without them?) Specifically, some delays might come from implementing state replication and availability, and from finding a suitable smart contract auditor. But we still have some time left before the end of Q1, which should give us some buffer. As usual, these timelines are meant as general guidance and should not be taken as guarantees.

After that?

During Q2, pilot community projects should focus on growing their user base. Our team will resolve any technical issues as they are encountered whilst scaling up. If there are no major surprises, we expect to go to public beta, which is to say launch, around this period. An important point to keep in mind is that unless we reach significant scale in terms of active concurrent users, we can’t really declare the system ready for production use. Tests can only offer insights to a certain extent.

The Uniswap-powered Marketplace purchase flow is almost ready and going through some final testing. Going forward, Marketplace buyers can use ETH, DAI, or DATA to pay for a product! The incoming assets will be automatically swapped to DATA using Uniswap and sent to the seller as usual. This will allow us to expand the potential base of data buyers, who might otherwise be hindered by the initial requirement to hold DATA tokens. While we will continue to improve our Marketplace and roll out new features to increase adoption, there is nothing stopping a developer or team from deploying their own variation of a data marketplace on top of the Streamr Network. The code is open source, so if you are interested in this field or business segment, feel free to reach out to us on our Dev Forum and we will be happy to share our technical knowledge and assist whenever possible.
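As a rough illustration of what “swapped to DATA” means for a buyer, the sketch below estimates how much DATA a payment would produce. It uses the constant-product pricing formula with a 0.3% fee from Uniswap’s first version. The pool reserves are made-up numbers, the routing of DAI through ETH is an assumption, and `quote_data_out` is a hypothetical helper. None of this is taken from the Marketplace code.

```python
UNIT = 10**18  # amounts are integers in 18-decimal base units


def get_input_price(input_amount, input_reserve, output_reserve):
    """Constant-product output for a given input, after a 0.3% fee."""
    input_with_fee = input_amount * 997
    numerator = input_with_fee * output_reserve
    denominator = input_reserve * 1000 + input_with_fee
    return numerator // denominator


# Hypothetical pool reserves, NOT real market data.
ETH_DATA_POOL = {"eth": 1_000 * UNIT, "token": 20_000_000 * UNIT}
ETH_DAI_POOL = {"eth": 1_000 * UNIT, "token": 150_000 * UNIT}


def quote_data_out(asset, amount):
    """Estimate the DATA a seller would receive for a payment in `asset`."""
    if asset == "DATA":
        return amount  # no swap needed
    if asset == "ETH":
        return get_input_price(
            amount, ETH_DATA_POOL["eth"], ETH_DATA_POOL["token"]
        )
    if asset == "DAI":
        # Assumed two-hop route: DAI -> ETH, then ETH -> DATA.
        eth_out = get_input_price(
            amount, ETH_DAI_POOL["token"], ETH_DAI_POOL["eth"]
        )
        return get_input_price(
            eth_out, ETH_DATA_POOL["eth"], ETH_DATA_POOL["token"]
        )
    raise ValueError(f"unsupported asset: {asset}")


data_from_data = quote_data_out("DATA", 500 * UNIT)
data_from_eth = quote_data_out("ETH", 10 * UNIT)
data_from_dai = quote_data_out("DAI", 1_500 * UNIT)
```

With these invented reserves, 1,500 DAI and 10 ETH are worth the same at the pools’ spot prices. The DAI payment still yields less DATA, because it pays the fee and incurs slippage on two swaps instead of one.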

Network workshops

We hosted an internal two-day Network workshop in Helsinki in mid-January. The goal of the workshop was to establish the main directions for network development in 2020. Topics discussed included:

- Steps needed to allow untrusted parties to run nodes

- Improving scalability by having clients join the network as “light nodes”

- Enforcing data signing and implementing encryption in client libraries

- Rate limiting as a potential spam defense, or as a way to offer a freemium model

- Guarding against eclipse attacks / Sybil attacks

Other major topics discussed:

- Replacing websocket connections with WebRTC to allow peers behind NAT to connect to each other

- Tracker-based vs trackerless peer discovery and topology construction

- Getting started with token mechanics with help from BlockScience team

- Opening up the storage node market, making it possible for stream owners to choose where data gets stored

- Stream namespaces

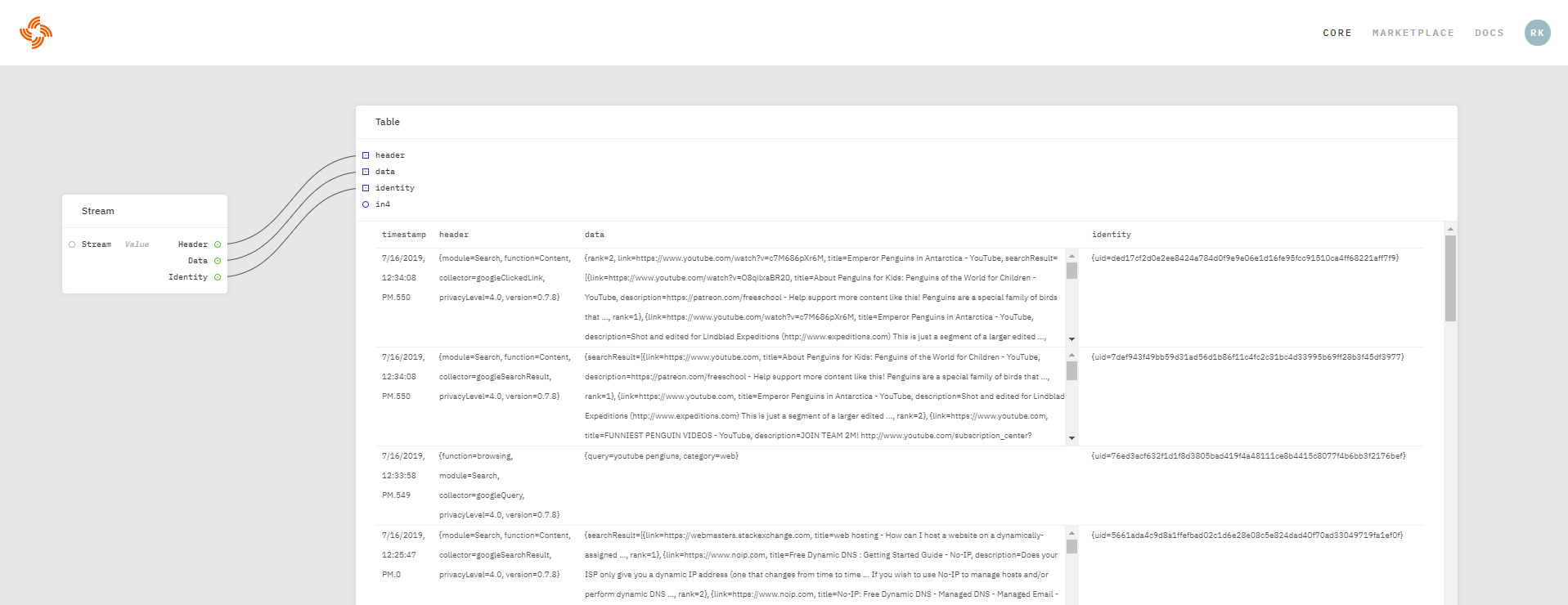

Below is the more detailed breakdown of the month’s developer tasks. If you’re a dev interested in the Streamr stack or have some integration ideas, you can join our community-run dev forum here.

As always, thanks for reading.

Network

- Setting up AWS machine for network emulation

- Fixed a bug in network and broker related to storing encrypted messages

- PR regarding end-to-end encryption key exchange is merged

- Fixing some problems with Cassandra storage

Community Products

- Making test data for CP frontend development and testing

- Pre-filling ETH addresses on product create

- Fixing bugs related to community member list

- Updated monoplasma dependencies so it builds on Node 12

- Fixing some issues in the Streamr JavaScript client for CP tests

- Auditing the latest version of the CP smart contract for security purposes

- Work on state replication across servers to achieve scalability

Core app (Engine, Editor, Marketplace, Website)

- Working on the new use-case pages

- Working on shared secrets and connecting Ethereum identity from the product editor

- Good progress on Uniswap integration with Marketplace payment options