Earlier this month, Henri was interviewed by Anna Rose and Fredrik Harryson from Zero Knowledge. The interview was so good, rich and full of detail that we decided to transcribe it in full. (Thanks to Mikhael Santos for starting this process.) And a warning: there might be more than a few typos!

Of course there’s nothing like the real thing. And you can find that here.

Host — “Welcome to Zero Knowledge, a podcast where we explore the latest in blockchain technology and the decentralized web. The show is hosted by me, Anna, and me, Fredrik. In this episode, we sit down with Henri from Streamr to talk about P2P networks, pub/sub protocols, data marketplaces and more.”

Host — So today we’re sitting with Henri Pihkala. Hi, Henri.

Henri — Hi, hi hieee! Thanks for having me.

Host — Maybe to kick off, it would be really cool to hear a little bit about you? Where are you from? What are you doing?

Henri — Okay, so my name is Henri and I’m the CEO of Streamr. I have a technical background; I’m a Finnish guy. I somehow ended up as CEO after various turns of luck or unluck. But anyway, here we are, deeply interested in the decentralized space and blockchain of course, and now trying to make sure that the Streamr project reaches its goals, which I’m sure we can talk about in this episode as well.

Host — Very cool. So can you just, like, give me the one-line description of what Streamr is? I think that’s good before we dig into the deeper topics here. What do you work on?

Henri — Yeah, so we’re working on the decentralized real-time data protocol for Web3, basically. So, creating a decentralized pub/sub network and some layers on top of that, like an incentivization layer to allow nodes to monetize their idle bandwidth and storage, as well as the actual value in the data. We’re talking about, you know, data sharing, data monetization, data marketplaces, stuff like that.

Host — When did this company start?

Henri — Well, it’s a long history. I mean, we’ve done this for a long time in various forms. It’s gone from algorithmic trading and developing trading bots, to more general cloud-based real-time data infrastructure, and from there into the decentralized space. So I’ve been working with real-time data for more than ten years, and also with the same people for a long time. We’ve taken different paths to arrive here, but I think this is the step that we feel most passionate about, because it truly has the best potential to change the world: how applications are built, and how data is used, owned, monetized and shared. So we feel that this is disruptive, and that we can be a part of building something completely new in the world.

Host — You mentioned earlier decentralized pub/sub messaging, and I guess messaging in general is a core part of what you work on. Developers in the audience might have heard of pub/sub before in different contexts. Even a Parity node has pub/sub: you can subscribe to events on the blockchain and the Parity node will publish them. But decentralized pub/sub is something a little bit different. Maybe you can give a high-level view of what that is?

Henri — Yeah, definitely. And maybe first define what we mean exactly by pub/sub. So of course, publish/subscribe, right. It’s a messaging pattern that consists of publishers who publish messages on topics, where topics are like collections of events. And then there are subscribers to whom these messages are delivered. This is a highly, highly useful primitive because it decouples the data producers from the data consumers. From the data producer’s side it’s mostly fire-and-forget: the producers don’t need to know who the recipients of a message will be. They just post it out there onto the system, and the subscribers receive it in real time.
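
To make the decoupling concrete, here is a minimal in-memory sketch of the pattern described above. It is not Streamr’s API: the `InMemoryPubSub` class and the topic names are hypothetical, and a real network adds transport, persistence and delivery guarantees on top.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List

Message = Dict[str, object]
Handler = Callable[[Message], None]


class InMemoryPubSub:
    """Toy broker: publishers and subscribers share nothing but a topic name."""

    def __init__(self) -> None:
        # topic name -> callbacks of everyone subscribed to it
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: Message) -> None:
        # Fire and forget: the publisher gets no list of recipients back.
        # .get() avoids creating an empty entry for topics nobody follows.
        for handler in self._subscribers.get(topic, []):
            handler(message)


if __name__ == "__main__":
    bus = InMemoryPubSub()
    dashboard: List[Message] = []
    archive: List[Message] = []
    bus.subscribe("sensors/temperature", dashboard.append)
    bus.subscribe("sensors/temperature", archive.append)
    bus.publish("sensors/temperature", {"celsius": 21.5})
    print(dashboard, archive)
```

The publisher only names a topic; whether zero, one or a hundred subscribers are listening is invisible to it.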

And you can, of course, have things like storage and message persistence happening there and so on. This is being used very widely in many different kinds of applications, ranging from financial data distribution to instant messaging applications, all kinds of gossip layers inside other networks, and so on. And of course real-time applications, devices reacting to each other’s data and all this.

So typically, and so far, this has been done using some kind of centralized message hub, maybe consisting of just one server or a cluster of servers. Anyway, this is something that’s available in the typical cloud services: Azure, Amazon, you name it, they have it.

Now, as we see decentralized service layers starting to form, we decided that this is something we want to take to the decentralized space, because it removes the need for trust, it improves the efficiency of the overall network, and it also removes the vendor lock-in.

If you build a system that relies on cloud services, or on a particular system being there as the back end or framework, then you’re kind of married to that system for a long time. And of course, the commercial players have their commercial interests in offering these services. So by building a decentralized system, we can probably achieve better cost-effectiveness and all the benefits of trustlessness and so on. And of course, maybe also offer the kind of incentive layer that allows you to make something useful out of your idle storage or idle bandwidth by joining the Network and helping others run it.

Host — You just mentioned how the publisher is, from what I understood, putting it out. Is this similar to the concept of broadcasting? Is that the word you would use?

Henri — Yeah, yeah. Broadcasting is a one-to-many pattern, basically. With a pub/sub system, you can have all the different variations. You could have one-to-one: a topic with one producer and one consumer, which would be the equivalent of a private channel in an instant messaging system. Broadcasting would be where there’s one publisher and a number of subscribers; maybe the analog would be a read-only Telegram channel, where only one person can post but many can read. And then you can of course have a many-to-many pattern as well. Maybe analogous to a group chat, but this is like a group chat for machines, in a sense: machine-data-oriented pub/sub.
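
The one-to-one, broadcast and many-to-many variations are not separate mechanisms; they fall out of how many publishers and subscribers share a topic. A small sketch, with a hypothetical `Topic` class and made-up participant names:

```python
from typing import Dict, List, Set


class Topic:
    """Hypothetical topic that tracks who publishes to it and who reads it."""

    def __init__(self) -> None:
        self.publishers: Set[str] = set()
        self.inboxes: Dict[str, List[str]] = {}  # subscriber -> received messages

    def subscribe(self, subscriber: str) -> None:
        self.inboxes.setdefault(subscriber, [])

    def publish(self, publisher: str, text: str) -> None:
        self.publishers.add(publisher)
        # Every current subscriber gets a copy; late joiners miss earlier messages.
        for inbox in self.inboxes.values():
            inbox.append(f"{publisher}: {text}")

    def pattern(self) -> str:
        """Name the messaging pattern implied by the current participants."""
        p, s = len(self.publishers), len(self.inboxes)
        if p == 0 or s == 0:
            return "inactive"
        if p == 1 and s == 1:
            return "one-to-one (private channel)"
        if p == 1:
            return "one-to-many (broadcast)"
        if s == 1:
            return "many-to-one"
        return "many-to-many (group chat)"


if __name__ == "__main__":
    channel = Topic()
    channel.subscribe("alice")
    channel.publish("sensor-1", "21.5")
    print(channel.pattern())
```

Adding a second reader turns the same topic into a broadcast, and a second writer turns it into a group chat for machines; nothing in the delivery code changes.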

Host — And maybe that’s worth just making that distinction, because I think when you hear the word messaging, you often think of text, some sort of human-language messaging. But I imagine we’re not talking about that here.

Henri — No, not at all. Well, of course someone could implement decentralized instant messaging for humans as well, and there are some. For example, the Status guys are using the Whisper protocol for their messaging feature. But mainly what happens in our Network is that we’re looking into use cases in the space of IoT and smart cities. Also some financial data, maybe, due to our background in finance. So it’s mostly machine data generated by sensors and applications, or metrics and telemetry from, you know, connected cars or mobile phones, things like this. So usually not really human interaction, but it could be; we’re not in any way opinionated about what can be on the network. You can use it for any kind of application.

Host — I wanna just quickly dig in a little bit, to get an idea of what you’ve already built and how this already works. When I think about pub/sub, I think very much of just the network layer: you can do pub/sub over UDP or over TCP, and I’ve used a lot of ZeroMQ in my days, and they have this very nicely implemented, but for a centralized system. So what do you have, and how do you actually broadcast stuff on the network level?

Henri — Yeah, so we’re working incrementally towards decentralization. We started off with a fully centralized system, pretty similar to what you’ve used before with ZeroMQ, using frameworks like Kafka, Cassandra and Redis: traditional ways of building a cloud service for that. And we implemented storage of messages and some playback functionality, stuff like that.

Currently we are working on the first peer-to-peer version, so we don’t yet have that up and running. It’s in the works now and should be deployed into our production version in maybe three to six months. So it’s making quite nice progress.

It still won’t have everything required for a fully decentralized system, in terms of giving certain security guarantees on the Network. So we’ll first deploy it as a version where we run all the nodes, and then we can start expanding from there: maybe allow some of our partners to run nodes, and eventually allow anyone to run a node.

But by that time, we’re going to need all those things like end-to-end encryption, and be better prepared against ill-behaving nodes and malicious activity in the Network. So that’s the hard part of building the Network overall. In addition to the Network layer, we of course have some stuff on top, so we’re taking a full-stack approach. In May this year we also launched a data Marketplace. It’s built on top of Ethereum and smart contracts, and uses the network layer below for data delivery. It’s where you can post data streams; it’s a discovery layer of sorts for the content that’s on the Network. You can post a product there. It can be free, or it can have a price. If it has a price, you can buy it and gain access to that data. So this offers a channel for data monetization, and it’s been a super interesting experiment for us to try various ideas around that topic.

Another tool that we have is this visual programming environment, which can be used to build, for example, data integrations, simple analytics, or even oracles for smart contracts. For example, there might be a data process that subscribes to some stream from the Network and monitors it. When certain conditions are met, or maybe periodically, it could send a transaction to a smart contract on the Ethereum blockchain, or stuff like that. So it’s quite versatile. It’s been on the back burner, since we were focusing on the Marketplace in the spring and are currently focusing on the Network. But all of these go together in a way that’s pretty nice for the user: you get the technical infrastructure on the Network layer, and then you get applications on top. All kinds of applications can be built on top of the Network layer, of course, but I think the Marketplace and the processing tool are pretty staple items in this kind of ecosystem.
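
A sketch of the oracle idea, assuming a stream of dictionary messages with a numeric `value` field. `ThresholdOracle`, the `reportAlert` method name and the `send_transaction` callback are all hypothetical; a real deployment would replace the callback with an actual Ethereum client call.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict


@dataclass
class ThresholdOracle:
    """Hypothetical oracle process: watches a stream and reports upward threshold crossings."""

    threshold: float
    # Stand-in for a real Ethereum client call; receives a transaction description.
    send_transaction: Callable[[Dict[str, Any]], None]
    _above: bool = field(default=False, init=False)

    def on_message(self, message: Dict[str, Any]) -> None:
        value = message["value"]
        is_above = value > self.threshold
        if is_above and not self._above:
            # Fire only on the crossing, not on every message above the
            # threshold, because each on-chain transaction costs gas.
            self.send_transaction({"method": "reportAlert", "value": value})
        self._above = is_above


if __name__ == "__main__":
    sent = []
    oracle = ThresholdOracle(threshold=50.0, send_transaction=sent.append)
    for reading in [10, 60, 70, 40, 55]:
        oracle.on_message({"value": reading})
    print(sent)
```

Firing only when the value crosses the threshold, rather than on every message above it, is one way to limit paid on-chain transactions; a periodic report is the other option mentioned above.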

Host — Yeah, it’s a very special type of developer that will build something directly on top of the network layer. Most people will want an intermediary to build things on.

Henri — Yeah, some assistance there for sure, especially for building integrations, just getting data in. It’s typically being served from some existing API, maybe a REST API or WebSocket API, or some IoT protocol like MQTT, and then you want to take that data and publish it onto the network. That’s something that can be made quite easy with the tooling that we have. But honestly, we’re not quite there yet in terms of user experience; it’s something that we’ll be working on further down the road.

Host — But what you describe on the Network layer, I mean, it sounds like you’re basically trying to build out the idea that everyone runs a node of some sort, and you have your traditional DHT discovery to find peers on the network and find people who are listening to the things you’re broadcasting, and then you’re off. But this sounds pretty similar to something like Whisper?

Henri — Yeah, it has the same principle, but very different goals. Whisper is much more privacy-oriented. It’s been designed from a perspective of anonymity and location obfuscation, and that leads to certain choices being made in the network topology. For example, messages in Whisper typically go over many, many hops to hide the origin of the message, whereas for our use cases we’re more focused on things like minimizing the latency and maximizing the throughput of the Network. So the idea is similar, but the use cases you have in mind affect both the structure of the network and its parameterization, and these things lead to very different outcomes in the end.

So we first had this dream that maybe we could use Whisper for our use case. But when you dig deeper and deeper, you discover: “Okay, the requirements are so different that we really do need to build something of our own for our use case.” I think any existing protocol out there could, of course, be extended, but then you hit the fundamental design principles of each protocol and notice that, yeah, we actually need to take a different approach.

Host — I kind of wanted to understand a little bit more where this fits into the larger Web3 tech stack and what you would be working with. Because as you just described it, it almost sounds like a standalone thing, but I imagine that it has to interact with a lot of other things. So where does it live?

Henri — Yeah, so basically it is a standalone thing, but it has certain bindings to other stuff that’s out there. For example, I mentioned earlier the incentive layer that we’re looking to build on top of this base protocol layer. That, of course, is not an island, right? So it has interactions. Currently we’re working in the Ethereum ecosystem, which hosts our ERC20 token and so on, and we’re also planning to use certain cryptographic choices made in the Ethereum ecosystem.

For example, we could use Ethereum identities on the Streamr Network. That would be a very straightforward way of representing a data producer on the Streamr Network. And later, when we build the incentive layer, it all kind of automagically works together in that sense.

So that’s something we’re pretty excited about. In the longer-term vision, we see that the Streamr Network could even span multiple different blockchain ecosystems. But I don’t know how feasible that is at the moment, and it’s certainly something that we’ll be looking into much further down the road. So, let’s see.

In terms of technology, we use libp2p, which is also a building block in, for example, IPFS and related technologies. That’s something we’ve taken as a starting point to aid us. You can get some useful transports from there, and a Kademlia DHT. They even have a pub/sub implementation, though it’s very, very early for that: it uses a naive message delivery algorithm that just floods the network with messages, so it’s not really scalable. Those guys are also working on improvements to that protocol, something called gossipsub, which looks pretty interesting. We might contribute to that development, or start using it, or build something of our own. It’s something where we’re looking for direction at the moment, because the guarantees given and needed by each system are so different.
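
To see why naive flooding does not scale, the sketch below counts transmissions when every node forwards a message to all neighbours except the one it came from. This is a generic flooding model, not libp2p’s actual floodsub code; the graphs are toy examples.

```python
from collections import deque
from typing import Deque, Dict, List, Optional, Set, Tuple

Graph = Dict[str, List[str]]  # node -> directly connected peers


def flood(graph: Graph, origin: str) -> Tuple[Set[str], int]:
    """Naive flooding: return (nodes reached, total transmissions)."""
    seen: Set[str] = {origin}
    transmissions = 0
    queue: Deque[Tuple[str, Optional[str]]] = deque([(origin, None)])
    while queue:
        node, came_from = queue.popleft()
        for peer in graph[node]:
            if peer == came_from:
                continue  # don't bounce the message straight back
            transmissions += 1  # every send uses bandwidth, duplicates included
            if peer not in seen:
                # Only the first copy is forwarded again; later ones are dropped.
                seen.add(peer)
                queue.append((peer, node))
    return seen, transmissions


if __name__ == "__main__":
    nodes = [f"n{i}" for i in range(5)]
    full_mesh = {n: [m for m in nodes if m != n] for n in nodes}
    reached, sent = flood(full_mesh, "n0")
    print(len(reached), sent)
```

On a full mesh of n nodes this costs (n-1)² sends for n-1 useful deliveries, so five nodes already need 16 transmissions. Gossip-style designs such as gossipsub aim to cut this by pushing full messages only along a bounded-degree mesh of peers.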

For us, for example, it’s important to have guarantees of delivery and guarantees of message order. If someone produces messages 1, 2, 3, 4, 5, we want the recipient to receive messages 1, 2, 3, 4, 5, and not, for example, 4, 2, 3 and 1, with 5 not arriving at all! This is something that could maybe be built on top of a protocol that gives fewer guarantees. So this is something that we’re actively experimenting with and researching at the moment.
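
One common way to get this in-order behaviour on top of a less reliable transport is to number each publisher’s messages and have the receiver buffer anything that arrives early. A minimal sketch, assuming per-publisher sequence numbers starting at 1; `OrderedReceiver` is a hypothetical name, not part of any Streamr library:

```python
from typing import Dict, List


class OrderedReceiver:
    """Delivers one publisher's messages strictly in sequence order, buffering gaps."""

    def __init__(self, first_seq: int = 1) -> None:
        self.next_seq = first_seq           # next sequence number we may deliver
        self.pending: Dict[int, str] = {}   # early arrivals waiting for a gap to fill
        self.delivered: List[str] = []

    def receive(self, seq: int, payload: str) -> None:
        if seq < self.next_seq or seq in self.pending:
            return  # duplicate: already delivered or already buffered
        self.pending[seq] = payload
        # Flush every message that is now contiguous with what was delivered.
        while self.next_seq in self.pending:
            self.delivered.append(self.pending.pop(self.next_seq))
            self.next_seq += 1

    def missing(self) -> List[int]:
        """Sequence numbers still awaited before the newest buffered one."""
        if not self.pending:
            return []
        return [s for s in range(self.next_seq, max(self.pending)) if s not in self.pending]


if __name__ == "__main__":
    rx = OrderedReceiver()
    for seq in [4, 2, 3, 1]:  # the out-of-order arrival from the example
        rx.receive(seq, f"message {seq}")
    print(rx.delivered, rx.next_seq)
```

Gap detection only works once a later message arrives: if message 5 is lost and nothing follows it, the receiver cannot tell, which is one reason real protocols pair sequence numbers with acknowledgements, heartbeats or resend requests.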

Host — So, in your early documentation around the project, there’s a lot of talk of it being an off-chain solution. Is that still the way you think about it?

Henri — Yeah, yeah, definitely. This is a data layer that is a companion network for a blockchain. Blockchains are expensive and non-scalable today, and typically it makes no sense to put data onto a blockchain. It’s also slow. It’s not good for data delivery, even if every transaction is indeed broadcast to the network; it still makes no sense. So we realized that we could bring something to the table by creating this real-time data layer on top of, and related to, the blockchain. But it’s not a blockchain. We’re not building another blockchain! There are quite a few of those already.

So what we want to contribute instead is this data network. And it fits into the larger picture of Web3 and all the infrastructure services getting decentralized over time. I think today, when people talk about dApps, they basically mean a frontend plus a smart contract backend.

But I think it will be much broader in scope than that. I mean, think of all the cloud services we use today: you’ve got your virtual machines, you’ve got your file storage, you’ve got your databases, your real-time data pipelines. All of these will eventually be replaced by decentralized alternatives. And this is already happening, with IPFS and Filecoin in file storage, with Golem in computing power, virtual machines and containerization of applications, with, I don’t know, BigchainDB on the database side, and with Streamr in the real-time data messaging and real-time data pipeline sector. These will all work together to enable much more flexible dApps in the future.

Host — Like you said before, you might be able to use more than one blockchain side by side. So rather than saying that Streamr lives on top of a blockchain, I would almost say that it lives below a blockchain … And then maybe you pay for data with Bitcoin and use Ethereum as the identity solution, so you actually use both blockchains at the same time.

Henri — Yeah, exactly. Maybe it’s not even two-dimensional. The Ethereum guys said that for Ethereum 2.0 they’re going to need a pub/sub system for the gossip protocol related to their sharding algorithm. So would the pub/sub be below the blockchain, inside the blockchain, above the blockchain, or on the side of the blockchain? Yeah, I don’t know.

Host — Off the blockchain?!

Henri — It doesn’t even matter where exactly it is! It’s connected to the blockchain for sure. That’s something that we know.

Host — I like the idea that you’re building a system that has some property that you want, like being able to quickly send data, or a high-throughput, low-latency network, and then you use the features of a blockchain that are unique. Like you’re saying, today a dApp is a JavaScript page on top of a smart contract, but I think it’s pretty clear that in the future a dApp will encompass a lot more. The question is just: what will developer tools look like? What will it look like for a user? A user obviously doesn’t want to run twenty different p2p nodes on their computer.

Henri — Yeah, exactly.

Host — Which was also Whisper’s initial goal: “it’s not a blockchain thing.” It only uses the Ethereum blockchain in the sense that it uses its nodes as a discovery method. So instead of running another node to do messaging, you just build it on top of the existing Ethereum network. And as far as I know, Whisper’s moving away a little bit from that now. We’ll see.

Henri — Yeah, yeah, I think it was a nice idea in the beginning. But if you think about the original Ethereum protocol stack involving these different parts, it’s quite ambitious. And maybe each of those parts actually has its own requirements, and they don’t find themselves comfortable living in the same network anymore. So time changes things.

Host — I sort of want to go back to something you were saying before about messaging. You had mentioned the benefits of decentralizing this. You talked about efficiency, and the fact that right now, if you don’t, you’re married to an enterprise system. But can you explain why it would actually be more efficient?

Henri — Efficiency can of course be measured in many ways, and the cloud providers do take good care of providing an efficient system. But especially if you think about cost efficiency, like “what does it cost to run this kind of system?”: the cloud providers and commercial players are of course looking to make their profit margins. So you’re not only paying for the actual technical service, you’re also paying for their profit, and a decentralized system would be a little bit different in terms of the mechanics that it can employ.

Another reason is that with a global network, with nodes all over the place, you might be able to get the data from your nearest node instead of always fetching it from, you know, the AWS data center in the States or wherever. So it gives you this geographical spread as an additional benefit of decentralization.

Host — I always wonder about that, though. Is it more of an assumption that efficiency would be improved? I’m trying to understand exactly what the certain benefits of decentralizing this are. For a proper decentralized Web3 system, is it because it’s actually more efficient? Or are we actually battling inefficiency?

Henri — Well, this question is already touching on the fundamental question of “why decentralization?” Why do we need decentralized apps in the first place? That’s an interesting topic in itself, and touches on the stuff we talked about earlier, like vendor lock-in and trust. But in terms of pure efficiency, how about we think about it like this: one aspect of efficiency is reducing the wasted capacity out there. People have internet connections they’re not using. They have storage that they’re not using. Would it be reasonable to add to the efficiency conversation that this is a way for you to make your idle resources more useful? That also adds to the whole picture. And it’s not like you’re, you know, burning energy to mine bitcoin. It’s more like you’re contributing useful things to the Network, which is what every decentralized network really should be doing: making use of resources that would otherwise be wasted, and allowing people to monetize that idle capacity.

Host — Maybe I’m also sort of mixing that up with the concept of speed. Like, is it actually faster?

Henri — Well, not necessarily. In a decentralized network, especially in messaging, you navigate the landscape of latency versus privacy, for example, as we discussed in the Whisper case. You can think of it as a one-dimensional picture. On one end there’s privacy and multiple hops, with very, very high latency, because the message travels all around before reaching its destination, kind of like the onion routing employed in the Tor network, right? On the other end you have super efficient delivery, where the data travels directly via the shortest possible route to its destination. But that also makes it less robust, because there can be only one shortest path, right? By definition. And if something happens to that shortest path (maybe a node goes down, maybe somebody attacks it, or whatever), then your messages no longer make it to their destination.
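
The robustness problem with a single fastest route can be illustrated with Dijkstra’s shortest-path algorithm on a toy network. The node names and millisecond link latencies below are invented for illustration; this is not how any particular network routes its messages.

```python
import heapq
from typing import AbstractSet, Dict, List, Optional, Tuple

Graph = Dict[str, Dict[str, float]]  # node -> {neighbour: link latency in ms}

# Invented toy network: two routes from A to D, one fast and one slow.
TOY_NETWORK: Graph = {
    "A": {"B": 10, "C": 15},
    "B": {"A": 10, "D": 10},
    "C": {"A": 15, "D": 25},
    "D": {"B": 10, "C": 25},
}


def shortest_path(
    graph: Graph, src: str, dst: str, down: AbstractSet[str] = frozenset()
) -> Optional[Tuple[float, List[str]]]:
    """Dijkstra over nodes not listed in `down`; returns (latency, path) or None."""
    queue: List[Tuple[float, str, List[str]]] = [(0.0, src, [src])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == dst:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for nxt, latency in graph[node].items():
            if nxt not in visited and nxt not in down:
                heapq.heappush(queue, (cost + latency, nxt, path + [nxt]))
    return None  # destination unreachable with the given nodes down


if __name__ == "__main__":
    for failed in [set(), {"B"}, {"B", "C"}]:
        print(sorted(failed), shortest_path(TOY_NETWORK, "A", "D", down=failed))
```

With all nodes up the fastest route is A → B → D at 20 ms; if B fails, traffic must reroute via C at 40 ms, and if C fails too, D is unreachable. A system that only forwards along the single best path has to detect the failure and recompute before delivery resumes, which is the latency-versus-robustness trade-off in miniature.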

Host — And there’s no redundancy there?

Henri — Yeah, so you’re always balancing which properties of the network are most important to you and which are not that important. Whisper, for example, chose that privacy is much more important than latency, so they can sacrifice latency and focus on privacy. In our use cases, like IoT, smart cities, financial data and stuff like that, we do want to take care of the latency. We may never achieve better latency than a direct connection between the two parties, but that doesn’t exist in the centralized world either. Say I’m in Switzerland and Fredrik is in Sweden, right? If I want to send a message to Fredrik via a cloud service that is on AWS in the United States, that’s hardly the shortest path either, right? I could send a peer-to-peer message to Fredrik over the internet, and it would probably take a reasonably fast route to him. But then again, I need to know who I’m sending the message to, because that’s all the TCP/IP stack offers me: I have to know who I’m connecting to.

If you have the pub/sub primitive, then that requirement is removed, which makes it easier for everyone. But it requires either a central party, which could be far away, destroying the latency, or a decentralized network, which can sometimes find a better route to Fredrik than going through the States. So it depends on the network topology and how the routing in the network works.

Host — I mean, efficiency at the end of the day always comes down to the use case and what you’re actually trying to do. If efficiency to you is throughput, then maybe a centralized system is not that bad. But if it’s latency, then it’s actually relatively easy to build a more efficient system. Like you’re saying, it doesn’t make sense to go through an AWS service for every message, especially in an IoT setup. If you have one sensor in a factory producing data, and a bunch of data consumers at the workstations of other machines or other people in that same factory, then you have a direct connection within that building.

That’s actually like a story that I came across. I was talking to someone, I can’t remember who, or maybe I read it, I don’t know. The story is that someone set up an IPFS node inside their office and switched all their npm package links to IPFS links. So instead of downloading every single package from npm each time, it was downloaded just once and then cached in the local IPFS node, so the office internet could go down and people could still install packages and run things. You could do that before with a cache server setup; you could make that work. But now it’s easy, and it solves the problem pretty neatly.

Henri — Yeah, exactly. And so far we’ve just been discussing the open, global network version of Streamr. But you could imagine that there could be private versions, maybe in a factory or a consortium of companies that want to do some real-time data sharing and stuff like that, and it would be far [more] beneficial to them and far [more] like an equal playground, so to say. There’s no leading participant that sets up and hosts the system. There’s also no cloud service that everyone needs to interact through. Instead, each participant in this private network can run a node, and they can freely exchange data, and if some of them go down and come back again, that doesn’t really harm the Network. Everyone’s equal in power, so they have this neutral ground where their applications or smart devices or whatever can meet and exchange data. So it can be deployed in many different scenarios, and in each of these it gives slightly different benefits compared to the cloud or other kinds of deployments.

So in the open, global deployment, you get things like idle resource monetization and maybe better latency. And in the private deployment you get equality of participants and so on. So yeah, this kind of primitive is pretty flexible, and we’re seeing demand for it. We get requests about how to deploy it and use it in various use cases. Of course we’re quite early still, as I said, so we don’t really have a production version of the peer-to-peer network yet, but it seems to us that there’s use for this kind of stuff in many, many different places, and we’re super excited about learning that.

Host — So as you just mentioned, it’s quite early, but are there real-world examples? You mentioned they’re not production-ready, but have they at least been fully thought out conceptually?

Henri — Yeah, there’s a bunch of pilots that we’re working on with various parties, and there have been a couple of recent blog posts about them. For example, there’s a traffic model pilot going on where location data is being crowdsourced, and that data is being used to build better traffic models for an area (it’s actually the whole country of Georgia where this traffic model is being built), to allow them to better plan things like where to build roads and where to add public transport lines.

Another interesting example is pollution monitoring in cities. We are working on a pilot in a certain neighborhood in London, where people host a pollution sensor in their homes, on the roof or by their window or whatever, and this data is being contributed to a stream that’s also offered as a product on the Streamr Marketplace. Anyone interested in this data can go and get it from there. This could include the city officials. It could include a concerned parent who can see that their kid is taking the most polluted route to school every day, and who might want to work around that in some way. So there are many kinds of use cases, especially around the concept of being able to crowdsource data.

Host — You just mentioned this Marketplace, or you had mentioned it a couple of times. What exactly do you mean when you say Marketplace? Is it that people would be paying for other people’s data?

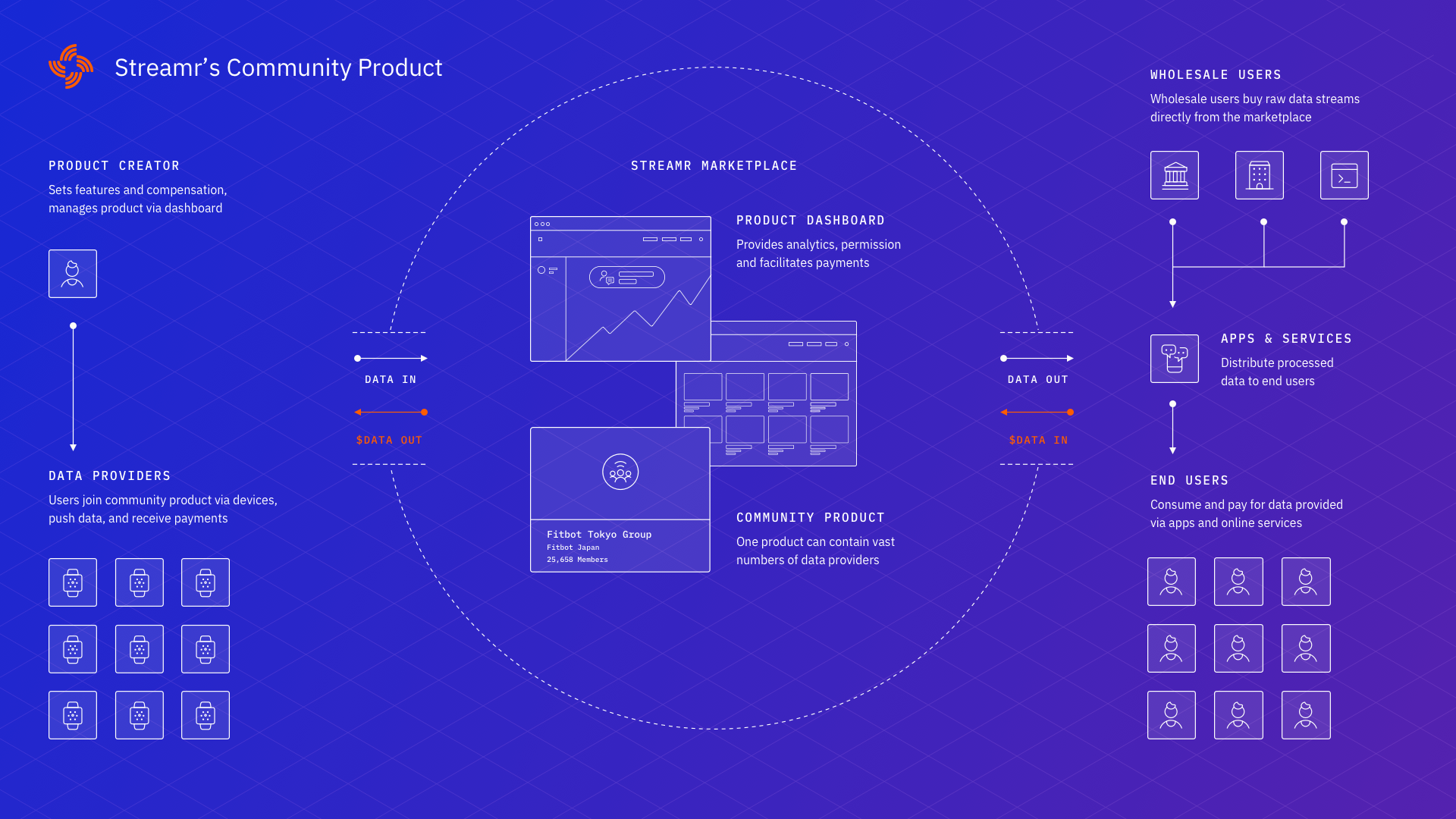

Henri — Yeah, that’s exactly what it is. You can put data onto the Network, then you can set a price for that data or make it free. And if someone wants it, they’re going to pay you for it. We have time-based licenses, so you can buy access to a data stream or a set of data streams for, you know, one hour, one week, or one month. So it’s very flexible. But today the Marketplace is still kind of a proof of concept. We wanted to show where the world can be headed by combining this kind of real-time data network and blockchain, so there’s not a huge amount of content on the Marketplace at the moment. We’re also actively working to explore the possibilities. One crucial feature that we’re still missing, and are currently working on, is something we call Community Products. That means exactly the data crowdsourcing idea, where a number of data producers can contribute data to a single pool. Then, when someone buys that product, that is, buys access to that data, the payment gets shared between the different data producers. So if there are fifty people contributing pollution data and someone buys it, then those fifty people get paid for it. In this example, you can earn money by hosting that sensor in your home. That is something that was completely infeasible before this kind of technology. No one has the answers in their back pocket, like what can this be used for? What should this be used for? So it’s something that we’re excited to explore!
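
The two mechanisms described above, time-based licenses and Community Products that share each payment among contributors, can be sketched as below. The equal split, the per-second pro-rating and all names are assumptions for illustration; the transcript does not say how the real Marketplace contracts compute prices or shares. Amounts are integers in a token’s smallest unit to avoid rounding drift.

```python
from dataclasses import dataclass
from typing import Dict, Iterable

SECONDS_PER_HOUR = 3600


@dataclass(frozen=True)
class License:
    """Hypothetical time-based access license to one data product."""

    buyer: str
    product: str
    starts_at: int   # Unix time in seconds
    duration_s: int  # e.g. 3600 for an hour, 7 * 86400 for a week

    def is_active(self, now: int) -> bool:
        return self.starts_at <= now < self.starts_at + self.duration_s


def price_for(price_per_hour: int, duration_s: int) -> int:
    """Price in the token's smallest unit, pro-rated per second (assumed rule)."""
    return price_per_hour * duration_s // SECONDS_PER_HOUR


def split_payment(amount: int, contributors: Iterable[str]) -> Dict[str, int]:
    """Assumed equal split; leftover units go one each to the first names in sorted order."""
    names = sorted(set(contributors))
    if not names:
        raise ValueError("a community product needs at least one contributor")
    base, remainder = divmod(amount, len(names))
    return {name: base + (1 if i < remainder else 0) for i, name in enumerate(names)}


if __name__ == "__main__":
    half_hour = License("buyer-1", "pollution-london", starts_at=0, duration_s=1800)
    paid = price_for(price_per_hour=120, duration_s=half_hour.duration_s)
    print(paid, split_payment(paid, ["sensor-a", "sensor-b", "sensor-c"]))
```

With fifty contributors and a payment of 5,000 units, each sensor host would receive 100 units under this equal-split assumption; a real product might instead weight shares by how much data each contributor supplied.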

Host — To be clear, you buy access to a data stream? It’s not like a lot of other projects, you know, like NuCypher or whatever, that talk about data marketplaces where I have this PDF that I upload, it gets encrypted and stored, and you buy access to it. So it’s not data that’s stored somewhere, where you pay and then get that chunk of data?

Henri — Yeah, yeah, that’s exactly it. We’re very focused on real-time data, so our Marketplace doesn’t have files, static data sets or anything like that. It’s purely about real-time data and getting event streams out of it.

We do store the history of messages, and sometimes that has value. Sometimes it’s a different product: you might have two products, one that contains the history and one that doesn’t. There might be different licensing reasons for this and so on. But anyway, the primitives that we’re building on the Network level mainly support this real-time data use case, and that’s also the mindset we have for the Marketplace.

Host — That also lets you work around some of the gaming issues that exist in these other systems. If you have a super high-value data set, like I upload this file and it’s worth a thousand bucks, you want to be sure that what you get for that thousand bucks is actually what it says on the package. And if it’s a completely decentralized network without identities, how do you prevent the system from being gamed? But in your scenario, it’s more like, “Well, I can buy a minute of access to this data stream, and if it’s right, then I’ll keep buying it.”

Henri — Yeah, yeah, exactly. People ask, like, do I get a trial period for these products or whatever? And we say, “Why? You can buy it for, you know, half an hour, see what it is, and then decide if you want to continue.” That makes it very feasible and very approachable as well.

But of course, as you mentioned, one problem in this kind of system, and in any system where users can provide content, is piracy. Someone uploads the latest Disney movie to YouTube every fifteen minutes or so, and then it gets taken down. But in a completely decentralized system with no police and no identity, it’s much easier to get away with this kind of thing. So maybe you have a high-value file in some other system, or a high-value data stream in our Marketplace. Someone buys it and publishes the exact same thing for half the price. So how do you prevent this kind of thing from happening?

Well, in one sense, it’s an unsolved problem. Everyone will encounter this kind of stuff. It’s also a matter of trust. If you’re building a business-critical application, let’s say you’re driving your robot cars or trading crypto or stocks or whatever, would you rather trust the original source, or would you base your whole business on data from a pirate? So it’s a fair question to ask. Also, even if there’s no central authority, you can have some kind of community governance of the content itself, like a reputation mechanism. Maybe the community can vote some sellers out of the Marketplace: “We don’t want you here!” So this is something we’ll be considering in the longer term, but the first version of the Marketplace is just to show that, yes, this is possible. You can have data licenses as smart contracts on the blockchain, and interacting with those can grant you access to data on a decentralized network. So it’s all about proving the pattern. But the devil is in the details, of course, as always.

Host — We have discussed this idea of incremental decentralization. Maybe we could talk a little bit about that.

Henri — What we mean by that is that our goal is to maintain a functioning, production-level system at all times while heading towards the big vision and full decentralization. Some projects take this approach and some don’t. But we didn’t want to go underground for three years, develop something, and then emerge with a beautiful, decentralized solution. Instead, we wanted to ship some value on day one and communicate that, “Yes, decentralization is where we’re headed, but you can already use the system, even though it still depends on us.”

So over time we’re going to make ourselves unnecessary in the system, but we’re not quite there yet. I think that approach delivers the most value to the user, which is of course the most important thing for us. Some very idealistic purists might disagree and say, “It’s either decentralized or it’s nothing.” And that’s fine. In a sense, I don’t disagree with that, and we’re working towards it. But it’s just easier to explore these things and avoid doing the wrong things if you get fairly immediate user feedback and can already show people, “Okay, this is working, this is how you use it,” even though how you use it might change a little along the way. It’s so useful for communicating the ideas to have something that’s working.

It also proves our ability to ship things. We’re not just presenting these far-reaching visions without actually shipping anything, so I think this approach works pretty well for us, our community, and our users.

Host — I think a lot of vaporware comes from a place where people are super well intentioned. They have a really good idea, and they sit down and want to build the perfect thing. But they get used to not shipping things, and they live in that mode for so long that eventually they get trapped in the idea of making the perfect thing with the perfect APIs and the perfect whatever. They just end up never shipping anything, and eventually the company dies or people lose interest.

Henri — And the risk of flopping increases all the time. Say you’ve been building a project for three or four years, a launch is nearing, and everything is hot. Your token is sky high because of the speculation, and then you finally put out this big-bang release of your system. If it’s not as good as expected, it will just be a huge disaster.

So we chose the approach of showing what we have and where we are, being very transparent, shipping things continuously, and acknowledging that it takes time to get to the end result. But at least we’re taking this noble ship approach, which is important to us.

Host — Cool. Well, I think on that point we can probably wrap up. I want to say thanks so much for coming on the podcast, Henri.

Host — Thank you very much.

Henri — Thanks, Anna and Fredrik. I had a lot of fun doing this.

Host — Nice. And to our listeners, thanks for listening.

Host — Thanks for listening!