Forecasting the future is a tricky business, but an ambitious new project from developer Jordan Miller, sponsored by the Data Fund, will leverage the Streamr Network to provide a better than chance real-time data prediction engine. Satori nodes need to communicate with each other and obtain real world data, so the Streamr Network was a perfect match.

Table of Contents

What is Satori’s Goal?

Satori is a decentralized network aimed at predicting the future. Satori nodes publish freely available data streams containing predictions of the next data point. In addition to this public good, where knowledge of the future is openly available, Satori aims at providing a facilitating market environment where predictions can be managed and traded in various ways such as through bounties, and competitions.

How does Satori work?

The technical architecture is quite simple. It involves three essential components:

1. A network of Satori nodes

2. A pub/sub data transport network (Streamr)

3. A blockchain

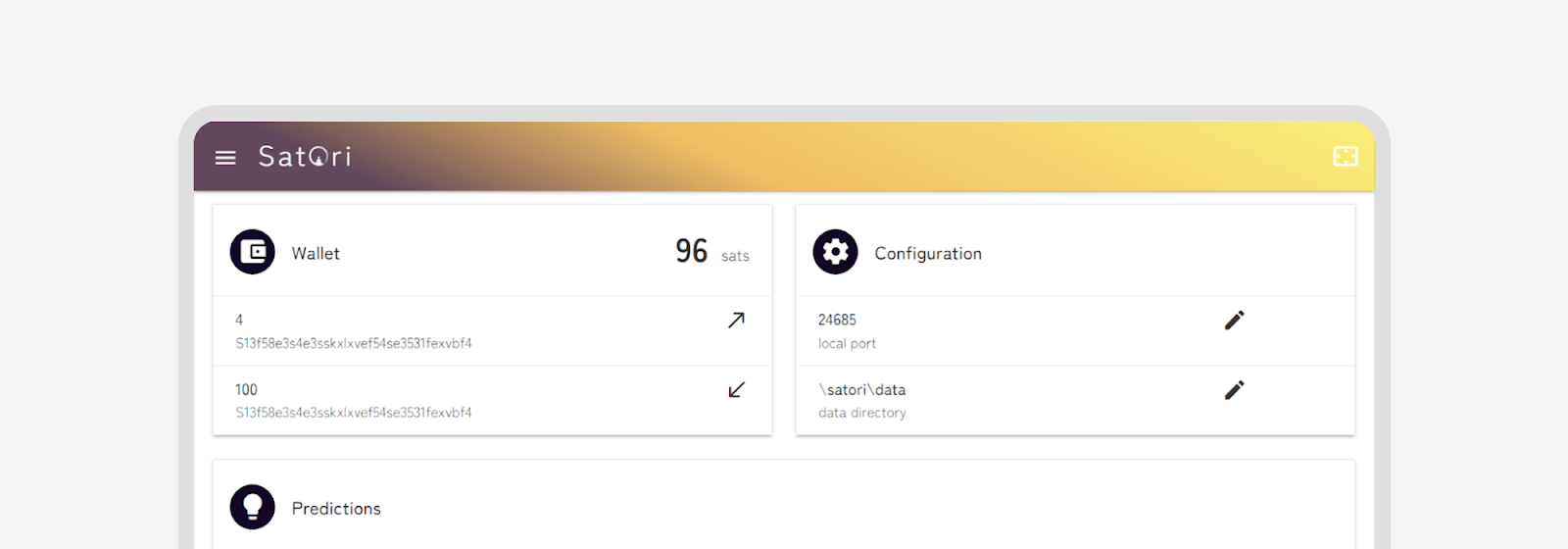

The Satori node is a downloadable piece of software that can be run on a machine of nearly any capacity. The Satori node’s main responsibility is to consume data streams from Streamr and predict those data stream’s immediate next data point. These predictions are published to new free data streams, so the future is available to all.

The Satori node has an automated machine learning engine which constantly generates new models in an attempt to find a model that best predicts the stream. Each Satori node, therefore, subscribes to one or more data streams of real world data and publishes corresponding prediction data streams.

How does Satori use the Streamr tech stack?

Satori has communication needs that a blockchain alone simply cannot provide. Satori is a high-throughput system. The main benefit of a distributed AI is that not all the data needs to be aggregated in one place, but the network as a whole has huge amounts of data flowing through it.

In theory, the Satori nodes, themselves, could form a distributed network, directly connecting to each other, and as such they would have as much throughput as they need. In practice, however, forming a truly peer-to-peer network adds a substantial amount of complexity, and no data outside the Satori network would be readily available, significantly limiting its capacity.

Furthermore, Satori needs a distributed solution because outsourcing this requirement to a centralized pub/sub provider means real world data, or predictions themselves could be censored. Satori’s aim at providing free and open access to the future is incompatible with a centralized pub/sub system. Luckily, Satori found its solution for decentralized, distributed bandwidth in Streamr.

Satori nodes constantly explore available streams on Streamr searching for correlations. To that end, each Satori node scans and ingest the history of open and paid data from the Streamr Network. Once the history of another stream is downloaded, the Satori engine analyses it in the context of the streams it’s trying to predict. If enough correlations are detected, the Satori node subscribes to the stream so it can use the new stream to make better predictions going forward.

Each Satori node uses the Streamr light client to facilitate requesting history, setting up and receiving updates on subscriptions, and publishing predictive data streams. Using the Streamr light client allows Satori nodes to be packaged as a normal executable, ready for download and easily installed, requiring little effort.

Is Satori scalable?

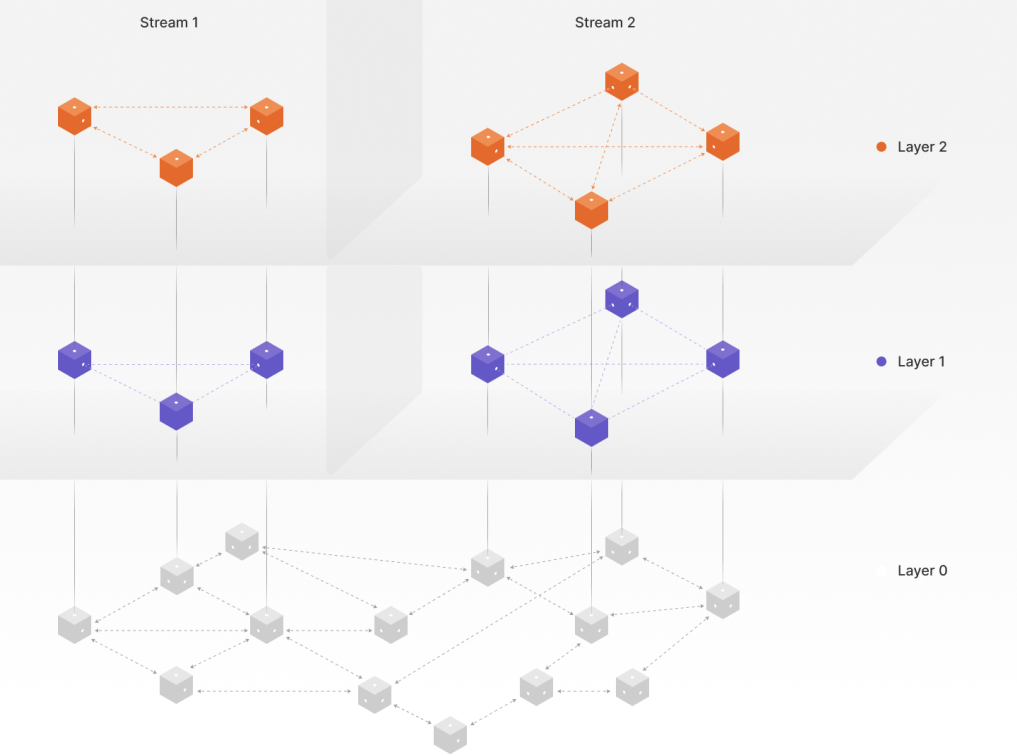

Prediction data streams are technically just streams of data and can be subscribed to and used as inputs to Satori predictive models. In other words, Satori nodes can leverage the work of other nodes by consuming their predictive streams. Since Satori nodes can leverage the work of other nodes, a certain level of hierarchy can be achieved and thus a certain level of scalable intelligence.

Prediction data streams can also be consumed as primary streams to be predicted. In this way, multiple steps into the future can be predicted. This prediction-of-prediction pattern will, of course, cease once the predictions produced are of no value (are not significantly better than chance).

How complicated is the automated machine learning engine?

The core of the Satori Node is an automated machine learning engine. It runs all the time, generating new models in an attempt to find one that better predicts the streams.

Since the engine is a module and is built in a modular fashion, parts or all of it can be replaced. This is beneficial for specific situations where a custom algorithm is more appropriate for the data. It also allows those with powerful hardware to utilize that hardware with complicated neural networks or any other ensemble of ML algorithms.

The default Satori engine should be sufficient for most situations, and is optimised to run on average hardware, needing no more than a typical CPU, some ram, and disk space. Those with very simple hardware, such as a home PC or Raspberry PI can use the algorithm that comes baked in by default.

Since the automated machine learning module constantly runs, looking for better and better models, it doesn’t need to be extremely complicated; it just needs to be able to build and evaluate models to find better ones over time.

Why generate predictions?

Prediction is immediately valuable, and laterally useful. For example, if you predict the future, you get anomaly detection for free. Prediction of the future is a fundamentally essential element of any intelligent system, including the brain itself. All parts of the neocortex are predicting the future of their inputs at all times. In this way, Satori is biologically inspired and represents the simplest useful distributed embodiment of this realisation.

How does Satori compare to other distributed AI projects?

Perhaps the default approach to distributed AI from a data scientist or academic perspective is to attempt to use the blockchain or some communication mechanism to share weights throughout the network. Essentially, to produce one large model spanning the entire network.

Another approach is to build a marketplace whereby various and disparate algorithms can combine their results together to form a larger intelligent function. This has been called a ‘society of mind’ approach. Unfortunately, the barrier to entry is quite high, those that are not data scientists or those who do not know how to build statistical or machine learning models are unable to participate.

A third approach employed by hedge funds is to use blockchain technology to crowdsource intelligence, again, from data scientists and those that can build ML models. This crowdsourced intelligence can then be shared or used to play the market.

The philosophy motivating Satori is that, firstly, each node must be autonomous and automatic, allowing for anyone to participate. Secondly, each Satori node must produce something of immediate, intrinsic value; a prediction of an underlying real world data stream which does not need to be interpreted or modelled to be used. Lastly, Satori nodes must make their predictions available for free in order to participate in the Satori network, therefore, knowledge of the future is widely available to all.

What’s the bottom line?

The principle guiding Satori’s design and development is that the simplest incarnation of distributed AI is the best way forward. Working with AI means dealing with complexity, and when dealing with complexity one must reduce it as soon as it arises. Satori is quite simple, yet has immediate real world utility.

Where can I go to learn more?

You can visit the Satori website for the project roadmap, GuitHub, or drop a message for Satori creator Jordan Miller in the Streamr Discord. There will also be an opportunity to get to know Satori better in an upcoming AMA as part of the building with Streamr YouTube series (data TBA).

Want to learn more about Streamr? Join the discussion and find your channel on Discord.