We are often asked what the first use cases for a decentralized data stack like the one we envisage at Streamr will be. What we do know is that the list of likely use cases is long: it ranges from environmental monitoring, secure chat channels, and pay-by-use metering to peer-to-peer package delivery, physical asset tracking, and other location-based services.

But whilst we like to think big, we also like to focus on things of the tangible sort. One use case which is close to our hearts is quantitative asset management and algorithmic trading in particular. In that spirit, we outline below the process for building and deploying a decentralized trading system using the Streamr network and Streamr Engine, and discuss ideas for building a profitable crypto algo.

This is a topic where we can only scratch the surface in one go, and this post is the first in a series. In subsequent write-ups we’ll get our hands dirty with historical crypto data and other relevant inputs. We’ll explore, visualize, and quantify the data, its basic statistical properties and relationships, and show how to go about building live algos for trading and investing in cryptocurrencies.

A nose for data

In the words of a certain English detective, “It is a capital mistake to theorize before one has data. Insensibly one begins to twist facts to suit theories, instead of theories to suit facts.” (Sir Arthur Conan Doyle: A Scandal in Bohemia, pg. 163).

Data is the raw material needed to develop and test investment ideas, and clearly you’ll also need it when you eventually deploy your systematic trading strategies live. But unless you work for a large bank or a well-funded trading operation, finding, downloading, cleaning, synchronizing, and processing financial data and other relevant inputs is a tedious and time-consuming task.

This is what we want to change. As described in our whitepaper draft, the Streamr stack includes a data market where any timestamped and cryptographically signed data is easy to find and use, is stored in a decentralized fashion, and is transported securely from data publishers to data consumers via an incentivized peer-to-peer network.
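
To make “timestamped and cryptographically signed” a little more concrete, here is a minimal Python sketch of a signed stream message. It is not the Streamr message format: the field names (`stream`, `ts`, `data`, `sig`), the stream ID, and the key are all hypothetical. To stay within the standard library, it swaps in an HMAC-SHA256 tag, which is a shared-secret scheme; a real data market would more likely use public-key signatures, so that consumers can verify a publisher without holding its secret.

```python
import hashlib
import hmac
import json
import time

# Hypothetical shared secret. HMAC is a symmetric stand-in for the
# public-key signatures a real data market would more likely use.
DEMO_KEY = b"publisher-demo-key"


def _canonical(body: dict) -> bytes:
    """Serialize deterministically so signer and verifier hash identical bytes."""
    return json.dumps(body, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign_message(stream_id: str, payload: dict, timestamp_ms: int,
                 key: bytes = DEMO_KEY) -> dict:
    """Wrap a payload in a timestamped envelope and attach an HMAC-SHA256 tag."""
    body = {"stream": stream_id, "ts": timestamp_ms, "data": payload}
    body["sig"] = hmac.new(key, _canonical(body), hashlib.sha256).hexdigest()
    return body


def verify_message(message: dict, key: bytes = DEMO_KEY) -> bool:
    """Recompute the tag over every field except 'sig' and compare in constant time."""
    body = {k: v for k, v in message.items() if k != "sig"}
    expected = hmac.new(key, _canonical(body), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, message.get("sig", ""))


if __name__ == "__main__":
    msg = sign_message("demo/eth-usd", {"price": 300.5}, int(time.time() * 1000))
    print(verify_message(msg))    # True
    msg["data"]["price"] = 301.0  # tamper with the payload after signing
    print(verify_message(msg))    # False
```

Because the tag covers the timestamp as well as the payload, a consumer can detect an altered event time just as easily as an altered price.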

But let’s make things concrete and look at some cryptocurrency data. We’ve just built an integration with the CryptoCompare API, so BTC and ETH prices from a number of popular exchanges are now available as data streams on the Streamr platform (we’ll add more cryptocurrencies later).

For now, we simply make the crypto data available in an easy-to-use form. In the future, the Streamr data market will allow vendors, exchanges, and consolidators to offer their data securely, either for free or for a fee, with the terms of use and the access rights stored on the Ethereum blockchain.

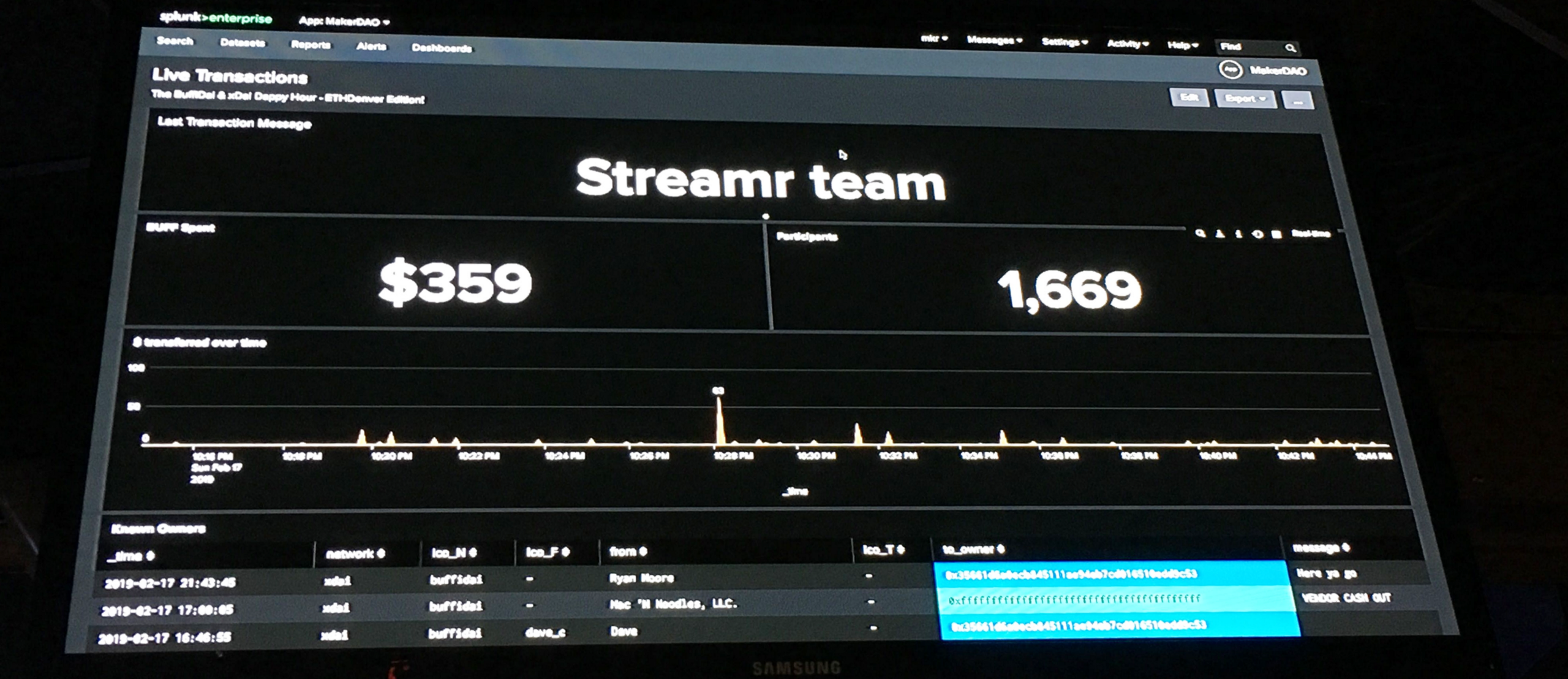

Here’s a live visualization of the Ether price (last trade) from the Kraken exchange. The data is persistent, so that you can easily look at the history and run a playback of the data and your trading decisions.
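
Playback is what makes stored history useful for testing ideas. The Python sketch below replays recorded `(timestamp, price)` events in time order through a strategy callback and logs every decision it makes. The function names (`replay`, `make_breakout_strategy`), the breakout rule, and the numbers are hypothetical illustrations; the prices are toy values, not real Kraken data.

```python
from typing import Callable, Iterable, List, Optional, Tuple

Event = Tuple[int, float]          # (timestamp in ms, last trade price)
Decision = Tuple[int, float, str]  # (timestamp, price, "buy" or "sell")
Strategy = Callable[[int, float], Optional[str]]


def replay(events: Iterable[Event], strategy: Strategy) -> List[Decision]:
    """Feed stored events to a strategy in timestamp order and log its decisions."""
    log: List[Decision] = []
    for ts, price in sorted(events):  # playback must respect event time
        decision = strategy(ts, price)
        if decision is not None:
            log.append((ts, price, decision))
    return log


def make_breakout_strategy(level: float) -> Strategy:
    """Hypothetical rule: go long when price rises above `level`, go flat when it falls below."""
    is_long = False

    def strategy(ts: int, price: float) -> Optional[str]:
        nonlocal is_long
        if price > level and not is_long:
            is_long = True
            return "buy"
        if price < level and is_long:
            is_long = False
            return "sell"
        return None

    return strategy


if __name__ == "__main__":
    # Toy numbers for illustration only, not real exchange data.
    history = [(1000, 295.0), (2000, 301.0), (3000, 304.0), (4000, 298.0)]
    print(replay(history, make_breakout_strategy(300.0)))
    # [(2000, 301.0, 'buy'), (4000, 298.0, 'sell')]
```

Since the same strategy function would receive live events one by one, the replayed decisions show how it would have behaved on the stored history.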

Event stream processing

The common denominator between algorithmic trading and virtually all of the things that Streamr is good for is the need to react to each new data point as soon as it becomes available. In the algo case, the app will process every incoming data point, be it a new trade price, a new bid or ask, or another piece of data with some bearing on the asset price. On-the-fly processing involves filtering and smoothing the rolling time series to find patterns that signal profitable trading opportunities.
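
One common way to smooth a price series on the fly is an exponential moving average (EMA), which needs only its previous value to absorb each new tick. The Python sketch below pairs a fast and a slow EMA and flags the points where they cross. This textbook pattern is used purely for illustration; it is not a Streamr Engine module, and the smoothing factors are arbitrary assumptions.

```python
from typing import Iterable, Iterator, Optional, Tuple


class EMA:
    """Exponential moving average updated one observation at a time."""

    def __init__(self, alpha: float):
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.value: Optional[float] = None  # no state until the first tick arrives

    def update(self, x: float) -> float:
        if self.value is None:
            self.value = x  # seed with the first observation
        else:
            # Equivalent to alpha * x + (1 - alpha) * previous value.
            self.value += self.alpha * (x - self.value)
        return self.value


def crossover_signals(prices: Iterable[float], fast_alpha: float = 0.5,
                      slow_alpha: float = 0.1) -> Iterator[Tuple[int, str]]:
    """Yield (index, 'up' or 'down') each time the fast EMA crosses the slow EMA."""
    fast, slow = EMA(fast_alpha), EMA(slow_alpha)
    prev_diff: Optional[float] = None
    for i, price in enumerate(prices):
        diff = fast.update(price) - slow.update(price)
        if prev_diff is not None:
            if prev_diff <= 0 < diff:
                yield i, "up"
            elif prev_diff >= 0 > diff:
                yield i, "down"
        prev_diff = diff


if __name__ == "__main__":
    ticks = [10.0, 10.0, 12.0, 14.0, 12.0, 8.0, 6.0]
    print(list(crossover_signals(ticks)))  # [(2, 'up'), (5, 'down')]
```

Each tick costs a constant amount of work, which is what lets this kind of filter keep up with a live stream.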

There’s lots of data that is potentially relevant to financial trading. By 2020, it is estimated that there’ll be 44 zettabytes of data in existence (that, of course, is equal to 44 trillion gigabytes). And some 2.5 exabytes (2.5 quintillion bytes or 2.5 billion gigabytes) of new data is being generated every day.

Luckily you won’t need all of that data to get started. In algorithmic trading, the useful information ranges from official economic statistics (what’s the nonfarm employment number? Better or worse than expected?) to machine-readable news stories (what’s happenin’ in the Hormuz Strait?) and supply and demand factors (how’s the wheat crop coming along? Is the storm heading toward the oil rigs? How many cars are there this week in Walmart car parks?).

The competition is fierce to process and make sense of new data before any short-lived profit opportunity disappears. What you need is a calculation engine specialized in event stream processing. Batch processing is simply too slow; you’ve got to react to each new data point as and when it arrives. What’s more, you need a stateful machine. Decisions depend on history, and it would be too cumbersome to recalculate analytics over a potentially sizable and ever-growing data set every time something happens. What’s required is the ability to update your decisions on the fly, always starting from the latest stored state.
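
The difference between recomputing and updating state can be shown with rolling statistics. In this Python sketch (the class and parameter names are hypothetical), each new event adjusts a fixed-size buffer and two running sums, so the cost per event stays constant no matter how long the history grows. The running-sums formula is the simplest option, but it can lose precision when values are large relative to their spread; Welford-style updates are a common, more stable alternative.

```python
import math
from collections import deque
from typing import Deque


class RollingStats:
    """Mean and sample standard deviation over the last `window` observations.

    The state (a buffer plus two running sums) is updated in O(1) per event,
    so nothing is recomputed over the full history when a new tick arrives.
    """

    def __init__(self, window: int):
        if window < 2:
            raise ValueError("window must be at least 2")
        self.window = window
        self.buf: Deque[float] = deque()
        self.total = 0.0
        self.total_sq = 0.0

    def update(self, x: float) -> None:
        self.buf.append(x)
        self.total += x
        self.total_sq += x * x
        if len(self.buf) > self.window:
            old = self.buf.popleft()  # evict the oldest observation from the state
            self.total -= old
            self.total_sq -= old * old

    @property
    def mean(self) -> float:
        if not self.buf:
            raise ValueError("no observations yet")
        return self.total / len(self.buf)

    @property
    def std(self) -> float:
        n = len(self.buf)
        if n < 2:
            return 0.0
        var = (self.total_sq - self.total * self.total / n) / (n - 1)
        return math.sqrt(max(var, 0.0))  # clamp tiny negative rounding errors


if __name__ == "__main__":
    stats = RollingStats(window=3)
    for price in [1.0, 2.0, 4.0, 7.0, 11.0]:
        stats.update(price)
    # Only the last three values (4, 7, 11) remain in the state.
    print(stats.mean, stats.std)
```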

Streamr Engine does all of this. A cloud-based version of the engine is already live, and the Streamr stack will incorporate a native analytics engine that allows for full decentralization. However, data volumes in practical use cases are often huge, so achieving reliability, low latency, and scalability while ensuring data integrity is not trivial. This is why we are working with Golem on key parts of the technology stack, aiming to decentralize event stream processing when and where it makes sense.

Ðapps made easy

Ok, all this sounds kind of cool, but how do I go about actually building a decentralized trading app? I probably need to start learning streaming SQL, or at least figure out how to write and map lambda functions to event streams?

Not so. We had the same problem years ago when we developed Streamr 0.1 for our own high-frequency statarb operation in the proverbial garage. In the team there was the deep tech knowledge, and there was the intuitive market knowledge, but never did the two meet in the same head. After banging those various heads together for a while a solution emerged: Let’s hide the complexity under the hood and expose it in a visual programming interface!

Wenceslas Hollar — Two deformed heads with long lips: State 2 (17th century)

Thus were sown the seeds for the Streamr Editor. A centralized beta version of the graphical UI is already operational on the cloud-based platform, and the same and more will be implemented as the usability layer in the decentralized Streamr stack. The editor gives you easy access to data streams, lets you route the incoming data through analytical and decision-making modules, and lets you interact with the external world (e.g. buy or sell when the moment is right).
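
As a rough text analogue of wiring modules together in the editor, the Python sketch below chains a filter, a decision rule, and an output module, passing each event downstream until some module returns `None`. Everything here is hypothetical: the `Pipeline` class, the thresholds, and the order sink, which only records decisions where a live system would call an exchange.

```python
from typing import Any, Callable, List, Optional

# A module takes a value and returns the value to pass downstream,
# or None to stop the event from propagating further.
Module = Callable[[Any], Optional[Any]]


class Pipeline:
    """Hypothetical linear chain of modules, a text analogue of wired boxes in an editor."""

    def __init__(self, *modules: Module):
        self.modules = list(modules)

    def push(self, event: Any) -> Optional[Any]:
        value = event
        for module in self.modules:
            value = module(value)
            if value is None:  # a filter or decision module swallowed the event
                return None
        return value


def drop_bad_ticks(price: float) -> Optional[float]:
    """Filter module: discard obviously invalid prices."""
    return price if price > 0 else None


def make_threshold_decider(buy_below: float, sell_above: float) -> Module:
    """Decision module with hypothetical fixed thresholds."""
    def decide(price: float) -> Optional[str]:
        if price < buy_below:
            return "buy"
        if price > sell_above:
            return "sell"
        return None
    return decide


def make_order_sink(orders: List[str]) -> Module:
    """Output module: records decisions; a live system would call an exchange here."""
    def sink(decision: str) -> str:
        orders.append(decision)
        return decision
    return sink


if __name__ == "__main__":
    orders: List[str] = []
    pipe = Pipeline(drop_bad_ticks, make_threshold_decider(290.0, 310.0), make_order_sink(orders))
    for tick in [300.0, -1.0, 285.0, 315.0]:
        pipe.push(tick)
    print(orders)  # ['buy', 'sell']
```

Keeping each module small and single-purpose is what makes the visual approach work: modules can be rearranged or swapped without touching the others.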

As a philosophical point, we believe in openness and transparency. You can use as much or as little of the Streamr stack as you need, and you can have many kinds of third-party software clients that subscribe to data streams and exploit the data. For instance, it is perfectly conceivable that someone will develop a specialized user interface just for researching, backtesting, and executing algo trading strategies. Or you might want to do the statistical analysis and backtesting in Matlab or Python, perhaps synchronizing and feeding in historical data from the Streamr data market. A number of integrations with other tools and platforms will be needed. If you’re up to the task, we’ll work with you.

Decentralized trading machines

Computers already do most of the trading in financial instruments. Most of those machines are controlled by hedge funds, large banks, and proprietary trading operations. But how will trading take place in a decentralized world? Think of a large peer-to-peer network of computing nodes which immediately react to incoming data, process the event stream, extract signals from the noise, and take care of your trading account or pension account automatically. Decentralization is the key to making this process resilient and robust, bringing down the data and infrastructure cost and greatly reducing the development time and effort.

We also see an ecosystem taking shape. The Melon portal is a wonderful example of an enabler platform which allows different modules to handle the many moving parts of the asset management process. And there will be others who step in, hook up to Melon endpoints, make use of the Streamr stack, and connect with each other in a growing network. There will be those who clean and refine the data, those who develop trading signals and valuation metrics, and others who provide risk control services, portfolio construction tools, etc. Some people (or machines, or robots) trade for themselves, but there will always be those who provide asset management services for others. What we want to do is make life easier and more secure for all of them.

As a final note, there’s a popular line of thought that cryptocurrencies are all about speculation and get-rich-quick schemes. Some may well be, but Sha Wang and Jean-Philippe Vergne make a fundamentally important point in their paper “Buzz Factor or Innovation Potential: What Explains Cryptocurrencies’ Returns?”: it is actually innovation potential that drives cryptocurrency returns.

The argument that the emergence of programmable cryptocurrencies is a fundamental game changer makes very good sense to us. And even if you don’t agree, the research by Ms. Wang and Dr. Vergne suggests some pretty interesting price indicators having to do with the community activity and the evolution of the code base. In the next post we will go through a number of factors which in our view are valuable as drivers of crypto returns.

Stay tuned for the next post in the series. And please join our Slack to stay up to date with Streamr.