The Streamr team made a herculean effort over March to release the Data Unions Framework upgrade, which is now live on xDai. While the launch had our focus, it didn’t stop the grassroots ecosystem developments happening at the network layer. Here are the highlights from the last two months:

- Data Unions 2.0 launched on xDai

- DATA tokens bridged to xDai

- New DATA/xDAI pool on Honeyswap

- Helium <> Streamr Network integration

- IFTTT <> Streamr Network integration

- Grafana <> Streamr Network integration

- Vidhara wins the India Data Challenge hackathon

- Streamr JavaScript client version 5 released

- New major Java client released

Data Unions 2.0

Almost everything under the hood has been rebuilt in this iteration of the framework. Arguably the biggest change is that the Data Union treasuries, which hold the yet-to-be-allocated funds on each Data Union smart contract, are now located on xDai rather than on the Ethereum mainnet. This means that member earnings are no longer held hostage on the mainnet, since withdrawing to the sidechain costs fractions of a cent.

We couldn’t have brought Data Unions to xDai without also bringing the DATA token along. From here on, DATA is a multichain asset. You can use the xDai Omnibridge to bridge your mainnet DATA tokens to xDai, or you can get DATA on Honeyswap via a direct fiat injection into the xDai ecosystem with Ramp. We established a DATA/xDai pool and added a large amount of seed liquidity to provide a good experience on the platform.

Bringing Data Unions directly on-chain will lead to more composability with DeFi legos, like API3 and various AMMs. A bridge between Data Unions and Binance is also in development – this will allow for fast and cheap withdrawals directly to your Binance deposit address.

Swash is currently working on its migration to 2.0 and there are new Data Unions in the works that will grow up very quickly!

Stream Registry

Currently, the registry of stream metadata and permissions is stored in a centralized database, which network users need to query for message validation. Our objective is to store this registry on-chain for better security, as well as to enable anyone to read its contents in a decentralized way. This project is led by our new hire, Sam Pitter. Sam is a senior smart contract developer based out of Germany. He’ll be working exclusively on this task and has already made great progress. The Stream Registry will initially be created and managed on the xDai chain for its low fees.

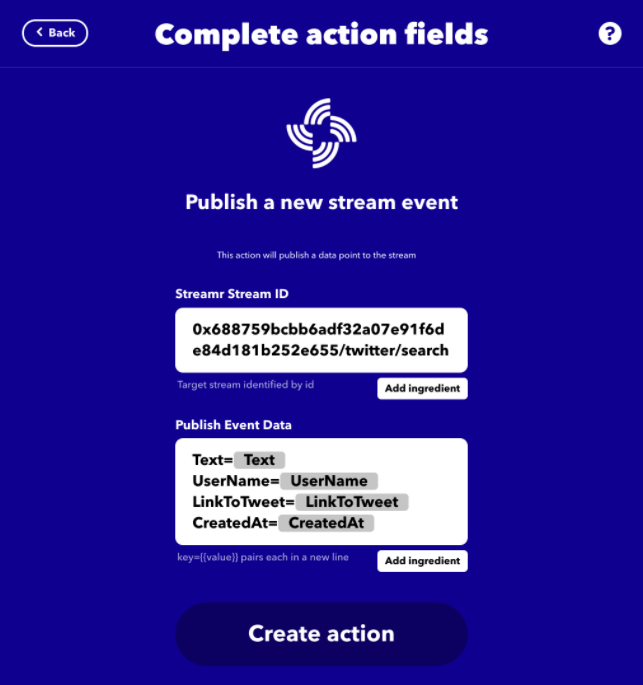

If-This-Then Streamr

Streamr now has an If-This-Then-That integration! You can now stream data to Streamr from the 600+ brands, services and IoT devices available on IFTTT: just mix data ingredients to build and push stream data points. Real-time data on Streamr can also act as an input trigger for any other API on the platform, which makes it possible to build powerful logic and feedback loops.

For example, you could connect Spotify and Streamr together with drag and drop tools – every time you play a song, the song name would be pushed into a stream. It works the other way around too. Every time a new data point arrives on a stream, you could write it to a Google sheet. Or if you have a stream of photo URLs, they could be published to a photo hosting service, in real-time. The possibilities are endless. Here is a guide to help you get started.

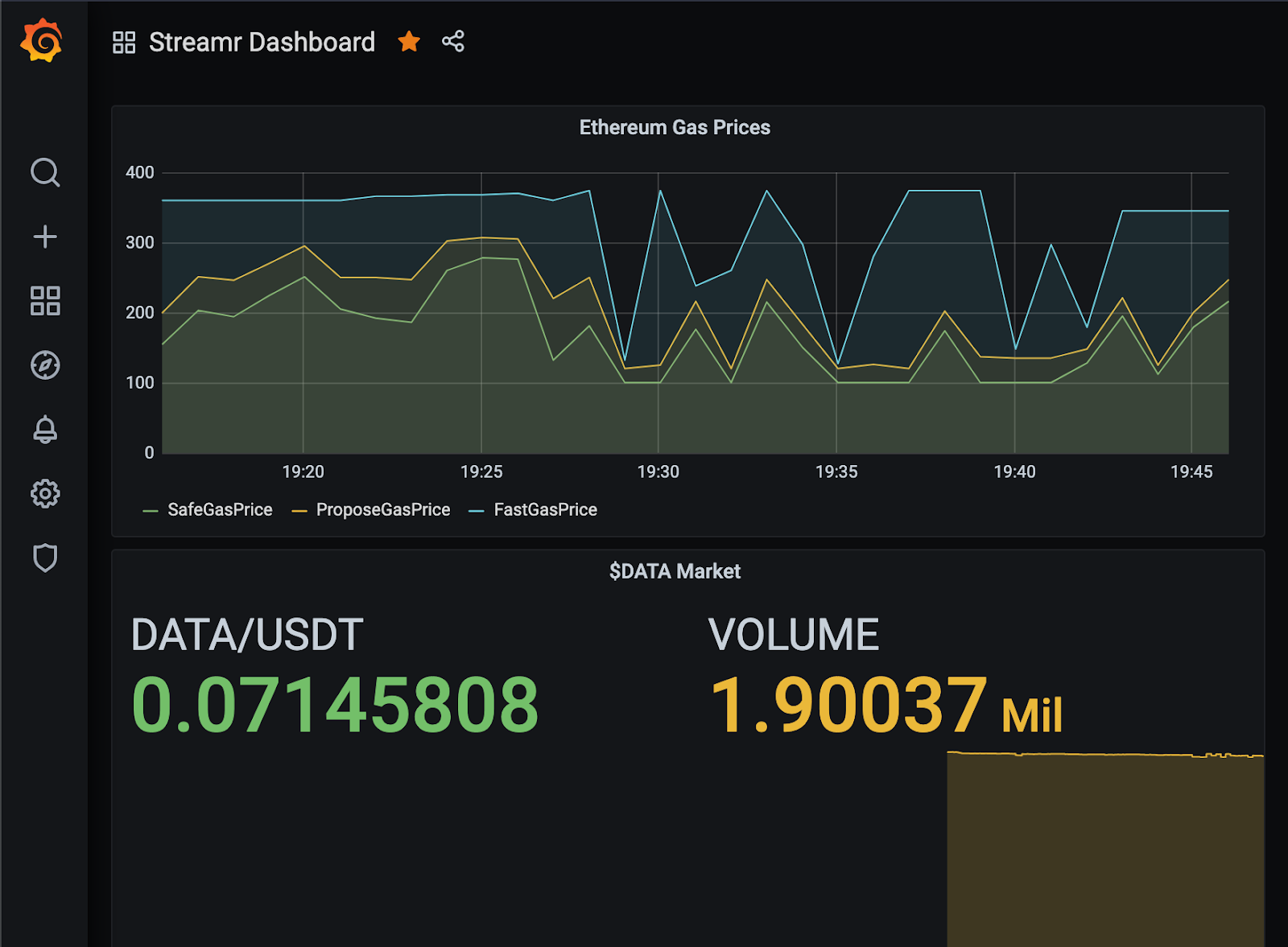

Streamr Dashboards go Grafana

Streamr is now an official community data source of Grafana.

Check out the Streamr plugin on Grafana, and if you’re new to Grafana, here is a great getting started guide to get your streams visualised.

Token migration

We are reaching out to exchanges, wallets and aggregators for their support in the upcoming token migration, along with preparing the support documentation for users. We will be building a token migration tool and expect exchanges to support the migration natively. We are also researching the different token standards, especially the pros and cons of adopting the ERC777 standard (completely backwards compatible with ERC20).

Tokenomics and the Streamr Network

Measuring the performance and health of the network has been the focus of the network team lately, especially now that the Network Explorer allows us to inspect how topologies are formed in a much more visual way. We’ve also exposed latency metrics per stream topology to better understand the emergent performance of the network at the lowest levels.

Network throughput is being looked at quite closely, specifically the tradeoffs between WebRTC and websockets. WebRTC is a complex technology that behaves differently to our websocket implementation under heavy loads. We’re tinkering at the very heart of the WebRTC protocol and pushing it to its limits. We look forward to sharing the results of our progress as we get closer to the Brubeck milestone.

Our tokenomics research is in Phase 3, which has so far included work on formally defining an “MVP” of the Agreement (the thing that connects Payers to Brokers, i.e. connects demand and supply in the network). So in Phase 3 we’re starting to get into the really important bits, which is very exciting. Next, the initial Agreement model will be implemented in cadCAD. We’ll then be able to simulate the behavior and earnings of Brokers (node operators) under various assumptions about how supply and demand enter the system and, for example, how the initial growth of the network could be accelerated via potential use of additional reward tokens (a bit like yield farming in DeFi).

The full tokenomics isn’t planned until the Tatum milestone, which is due next year. However, this year we could already have additional token incentives to help kickstart the decentralization of the network once we reach the Brubeck milestone.

Streamr Clients

We’ve pushed a major version update to the client – version 5 is now released. The update contains over 500 commits and brings compatibility with Data Unions 2.0. Only version 5 and up will be compatible with Data Unions from here on. The Java client has also been updated to be compatible with Data Unions 2.0.

This update is also about laying the foundations for moving logic from the client layer into the broker layer. This decoupling will mean that the JS client imports the Network package. Clients will then be ‘light’ in that the heavy logic, such as message ordering and gap filling, will be handled once, in the imported Network package. When this architecture is achieved we will have taken another big step towards the Brubeck milestone.
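To make "message ordering and gap filling" concrete, here is a minimal, generic sketch of the idea. It is not the Streamr client or Network implementation: it assumes each message carries a simple integer sequence number starting at 0, and the `OrderingBuffer` class and its method names are hypothetical. The buffer holds back out-of-order messages, releases them in order, and reports the missing range that a caller would then ask to have resent.

```python
from dataclasses import dataclass, field


@dataclass
class OrderingBuffer:
    """Releases messages in sequence order and reports missing ranges.

    Hypothetical illustration: assumes integer sequence numbers from 0.
    """
    next_seq: int = 0
    pending: dict = field(default_factory=dict)

    def push(self, seq, payload):
        """Accept one message; return (deliverable payloads, gap or None)."""
        if seq < self.next_seq or seq in self.pending:
            return [], None  # duplicate, or already delivered
        self.pending[seq] = payload

        # Deliver every message that is now contiguous with what came before.
        delivered = []
        while self.next_seq in self.pending:
            delivered.append(self.pending.pop(self.next_seq))
            self.next_seq += 1

        gap = None
        if self.pending:
            # Something is buffered beyond next_seq, so a range is missing.
            gap = (self.next_seq, min(self.pending) - 1)
        return delivered, gap
```

Pushing messages 0, 2, 1 in that order delivers message 0 immediately, buffers message 2 while reporting the gap `(1, 1)`, and then releases messages 1 and 2 together once the gap is filled. Handling this once in a shared package, rather than in every client, is the point of the decoupling described above.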

Deprecations and Breaking Changes

A number of API endpoints need to be retired and replaced to be compatible with our vision of decentralization. This section summarises deprecated features and upcoming breaking changes. Items marked ‘Date TBD’ will be happening in the medium term, but a date has not yet been set.

- On May 31st 2021, the API endpoint to create a stream (/streams POST) will no longer automatically generate an ID if one is not supplied in the request. This means that a valid stream ID changes from optional to required. The Streamr clients will be updated in April with this breaking change.

- At the end of April 2021, the Canvas and Dashboard features will be removed from the Streamr Core application and the associated API. This was decided by the DATA token holders through a governance vote. You will still be able to create and manage streams, data products, and Data Unions as usual after this change. If you don’t currently use the Canvas or Dashboard features of the Core application, the change won’t affect you and you won’t notice any difference.

The code will be archived into a fork for safekeeping and potential later use. An example of later use could be to relaunch the Canvas tooling at a later time as a self-hosted version which would connect to the decentralized Streamr Network for data.

This notice period gives you time to migrate any of your Canvas-based stream processing workloads to other tools. We in the Streamr team are using a few Canvases ourselves for computing metrics, such as the real-time messages/second metric you see on the project website. It’s pretty straightforward to replace those Canvases with simple node.js scripts that compute the same results and leverage the Streamr JS library, and this is exactly what we intend to do for the few Canvas-based workloads we have internally.
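As a rough idea of how little logic such a replacement needs, here is a minimal sketch of a messages/second metric computed over a sliding time window. It is not the team's actual script: it is written in Python rather than node.js, it leaves out the Streamr subscription entirely, and the `MessageRate` class is a hypothetical name. In a real script, the stream subscription callback would call `record` for each incoming message.

```python
from collections import deque


class MessageRate:
    """Counts messages seen within a trailing time window (hypothetical helper)."""

    def __init__(self, window_seconds=1.0):
        self.window = window_seconds
        self.timestamps = deque()

    def record(self, now):
        """Register one message that arrived at time `now` (in seconds)."""
        self.timestamps.append(now)

    def rate(self, now):
        """Return messages per second over the window ending at `now`."""
        cutoff = now - self.window
        # Drop timestamps that have fallen out of the window.
        while self.timestamps and self.timestamps[0] <= cutoff:
            self.timestamps.popleft()
        return len(self.timestamps) / self.window
```

Passing timestamps in explicitly, instead of reading the clock inside the class, keeps the computation easy to test and to replay against recorded data.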

(Date TBD): Support for unsigned data will be dropped

Unsigned data on the network is not compatible with the goal of decentralization, because malicious nodes can tamper with data that is not signed. As the Streamr Network will be ready to start decentralizing at the next major milestone (Brubeck), support for unsigned data will end as part of the progress towards that milestone. Users should upgrade old client library versions to newer versions that support data signing, and use Ethereum key-based authentication.
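The tampering problem can be illustrated with a small sketch. Note the substitution: the network uses Ethereum key-based signatures, which the Python standard library does not provide, so this example uses HMAC-SHA256 as a stand-in. HMAC relies on a shared secret rather than a public/private key pair, so it only demonstrates the tamper-detection property, not how Streamr signs messages. The function names and the example key are hypothetical.

```python
import hashlib
import hmac
import json


def sign(payload, key):
    """Return a hex HMAC-SHA256 tag over a canonical JSON encoding."""
    body = json.dumps(payload, sort_keys=True).encode("utf-8")
    return hmac.new(key, body, hashlib.sha256).hexdigest()


def verify(payload, tag, key):
    """Check the tag in constant time; False means the payload changed."""
    return hmac.compare_digest(sign(payload, key), tag)


key = b"demo-key-not-for-production"  # stand-in secret for illustration only
message = {"temperature": 21}
tag = sign(message, key)

untouched_ok = verify(message, tag, key)             # payload as published
tampered_ok = verify({"temperature": 99}, tag, key)  # payload altered in transit
```

A relaying node that alters the payload cannot produce a matching tag without the key, so the receiver can reject the message. With unsigned data there is nothing to check, which is why it cannot be trusted once relaying nodes are run by arbitrary parties.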