The whole team is thrilled with the high demand we have seen to run a Streamr Node and with the overall performance we were able to observe during the three Brubeck Testnets! We are now more than excited to present to you a first glimpse of the Testnet results.

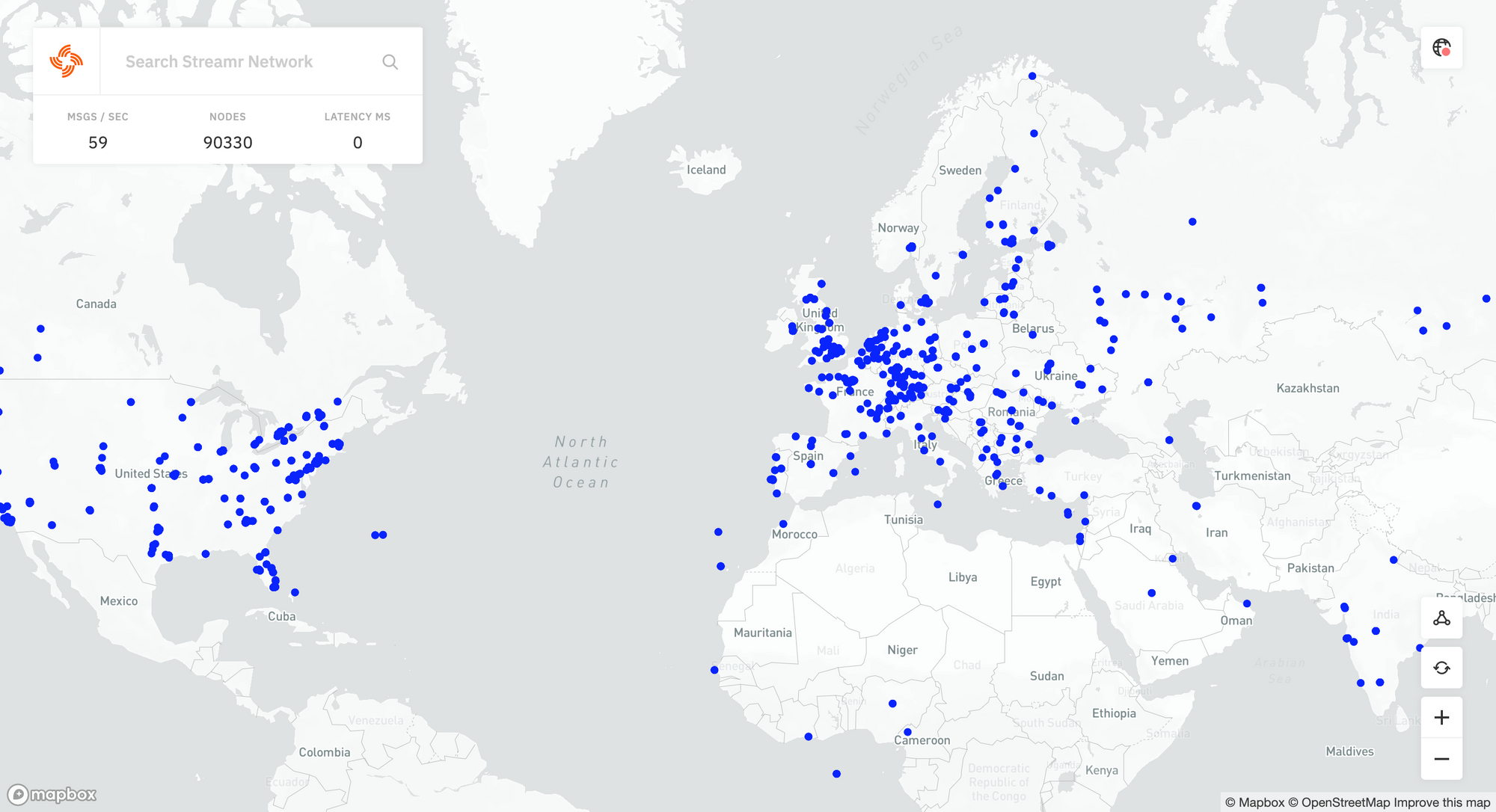

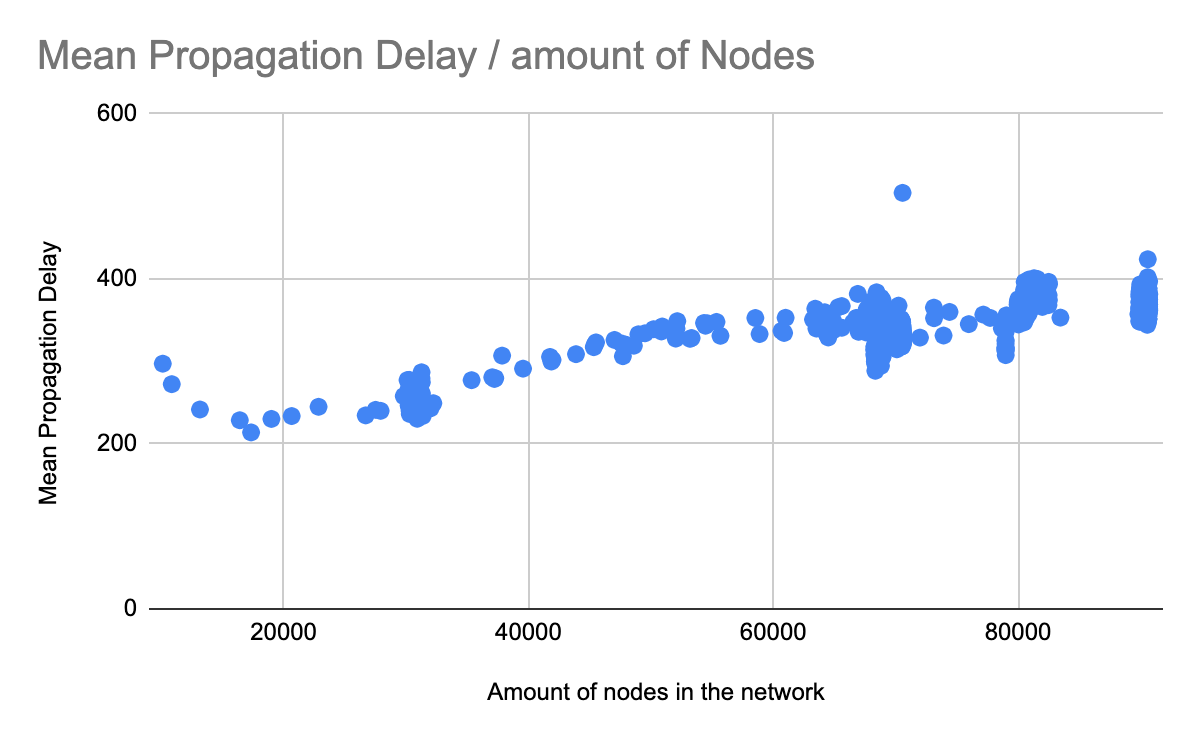

Let’s start with the most obvious win. The size of the third Testnet was massive! At its peak, we were able to see over 90,000 nodes participating from more than 85 different countries. And despite the size, node runners were able to broadcast messages to all of the nodes in about 350 milliseconds on average. This confirms our hypothesis on the Streamr Network’s scalability, but more on that later.

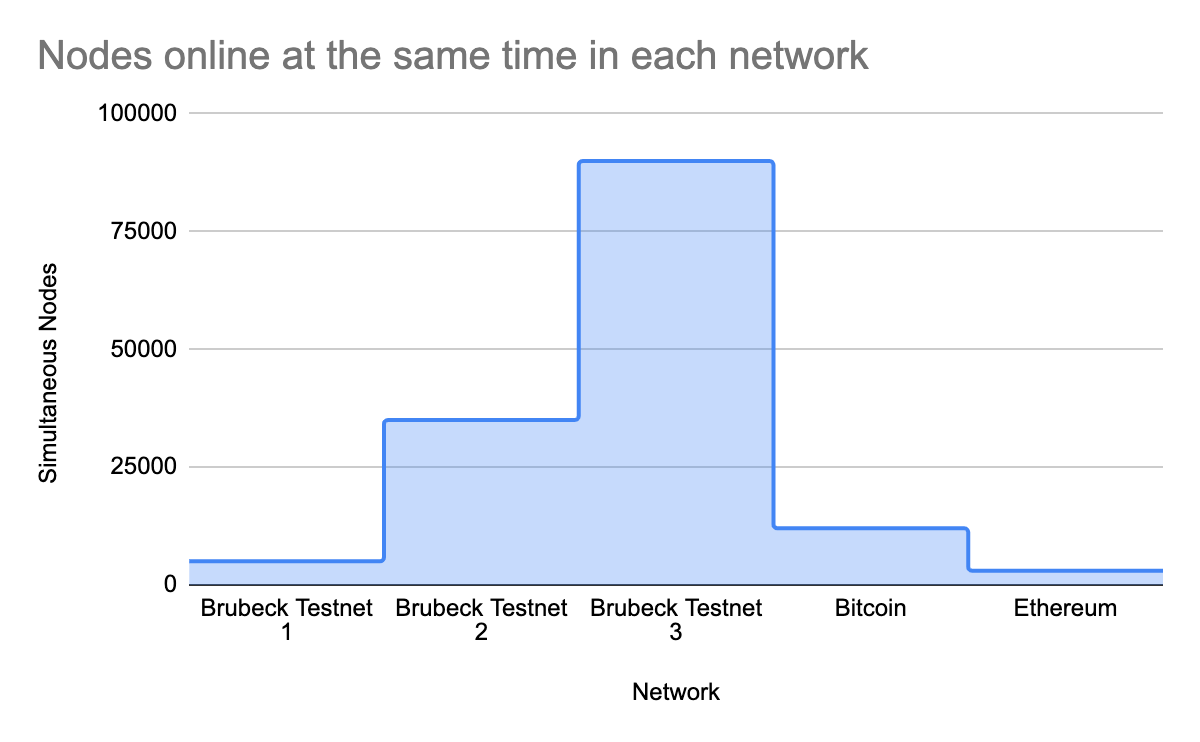

Let’s take a closer look at the number of node runners we had in the Network. With 130,000 nodes in total, the Streamr Testnet formed one of the biggest P2P networks in computing history. The sheer size of the Streamr Network dwarfs known blockchain networks such as Ethereum or Bitcoin, which have around 3,000 nodes and 12,000 nodes respectively. Obviously, comparing Streamr Broker nodes to Ethereum or Bitcoin nodes is like comparing apples to oranges, since the Streamr Network is not a blockchain, but it gives a good idea of how big the test network actually was.

We incentivised node runners to join by letting them mine rewards in return for their idle bandwidth. In total, 2,000,000 DATA tokens are to be airdropped to around 130,000 individual wallets on an Ethereum side chain. More details on this will follow soon. These two leaderboards, here and here, built by Streamr community devs, give a good overview of the rewards distribution. In addition, NFTs were recently dropped to special contributors of the testnets and the most active community members. Check them out here!

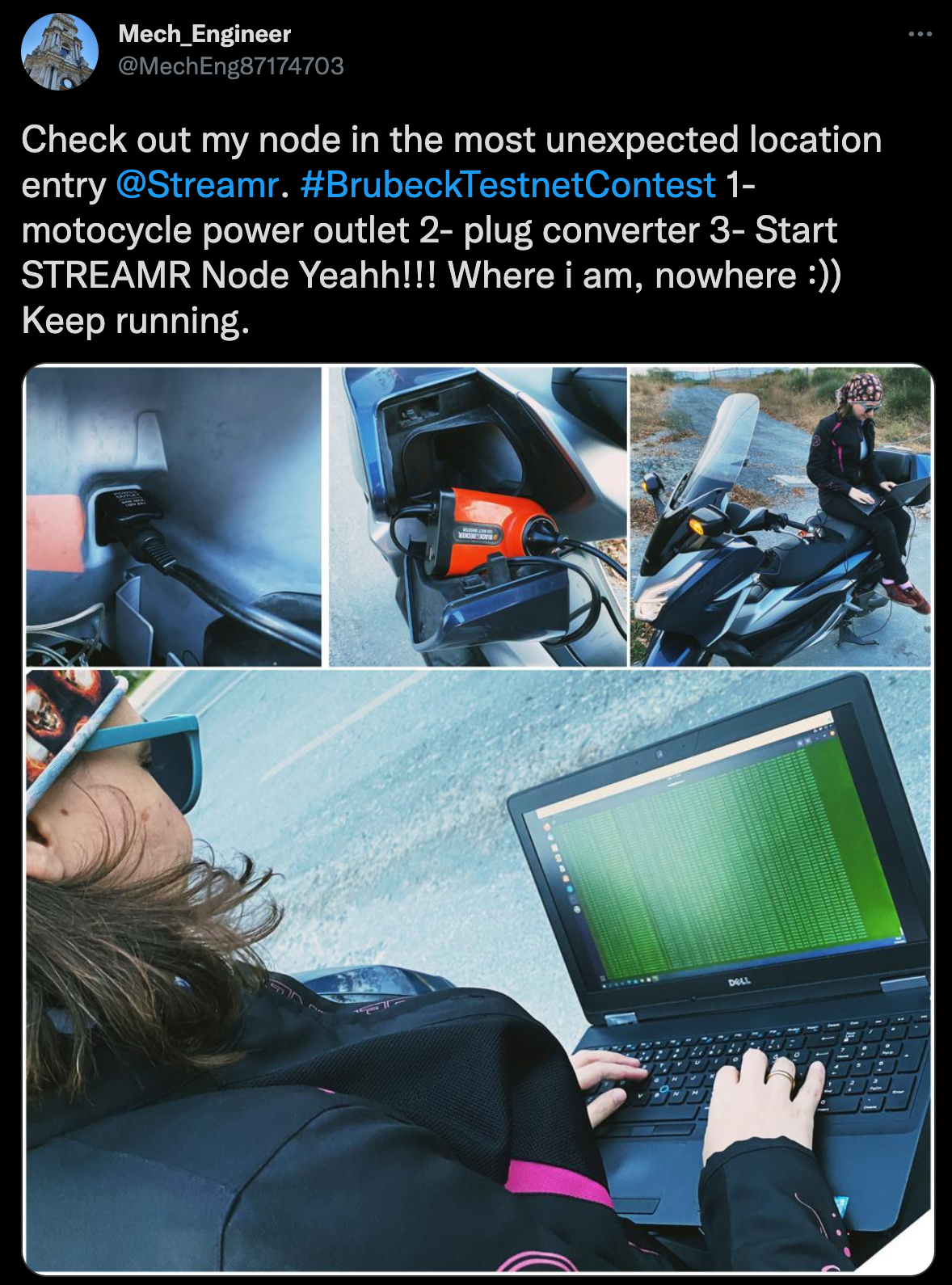

Another driver that brought in node runners was the simple setup. A Streamr Broker node is super easy to run: it can be done with modest computer skills, either by installing the required software and dependencies from NPM or by using one of the Streamr Docker images. And it turns out that people were running the nodes in very different setups. On Twitter, the community shared their node setups, including a Raspberry Pi getting power from a solar panel, or an old laptop powered by electricity taken from a motorbike. There were also tablets, phones and of course plenty of different server and cloud setups on Linux, Android, Raspberry Pi OS, macOS and Windows.

So what did the team find out during the Testnets? The first sign that the Brubeck Testnet was going to be so popular came from the centralised components that were still part of the Testnet. The team was prepared to horizontally scale the operation and with small changes we got from 5,000 nodes to 90,000 nodes. There really is no limit on the number of nodes the Network can actually have―so in the future we will hopefully be able to see an even bigger Streamr Network.

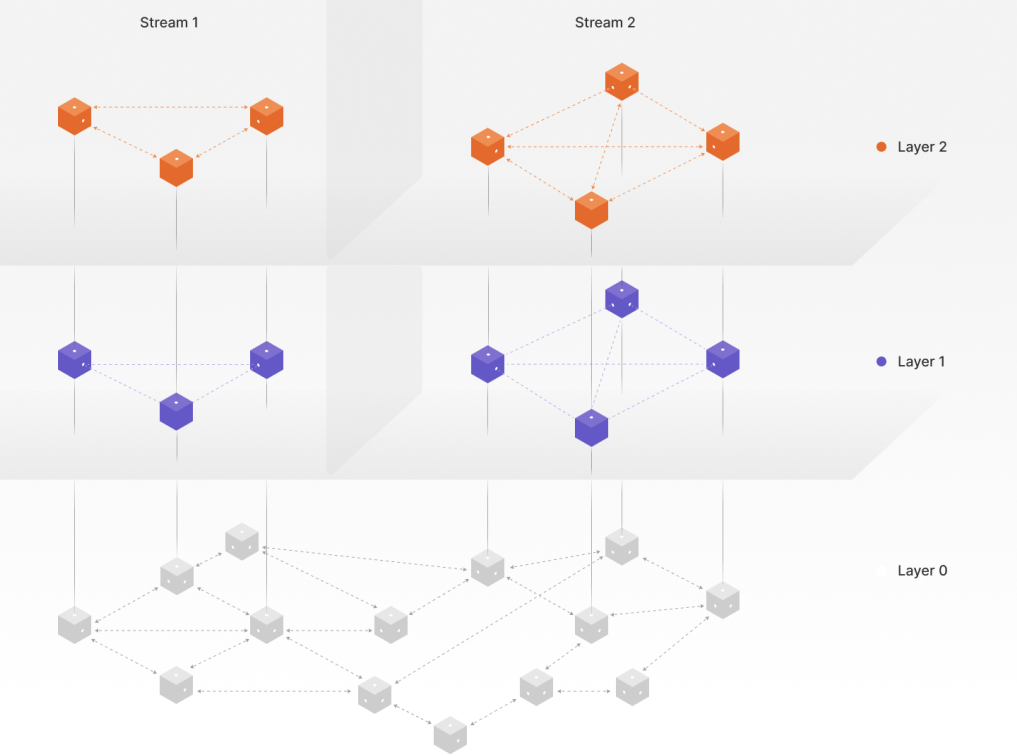

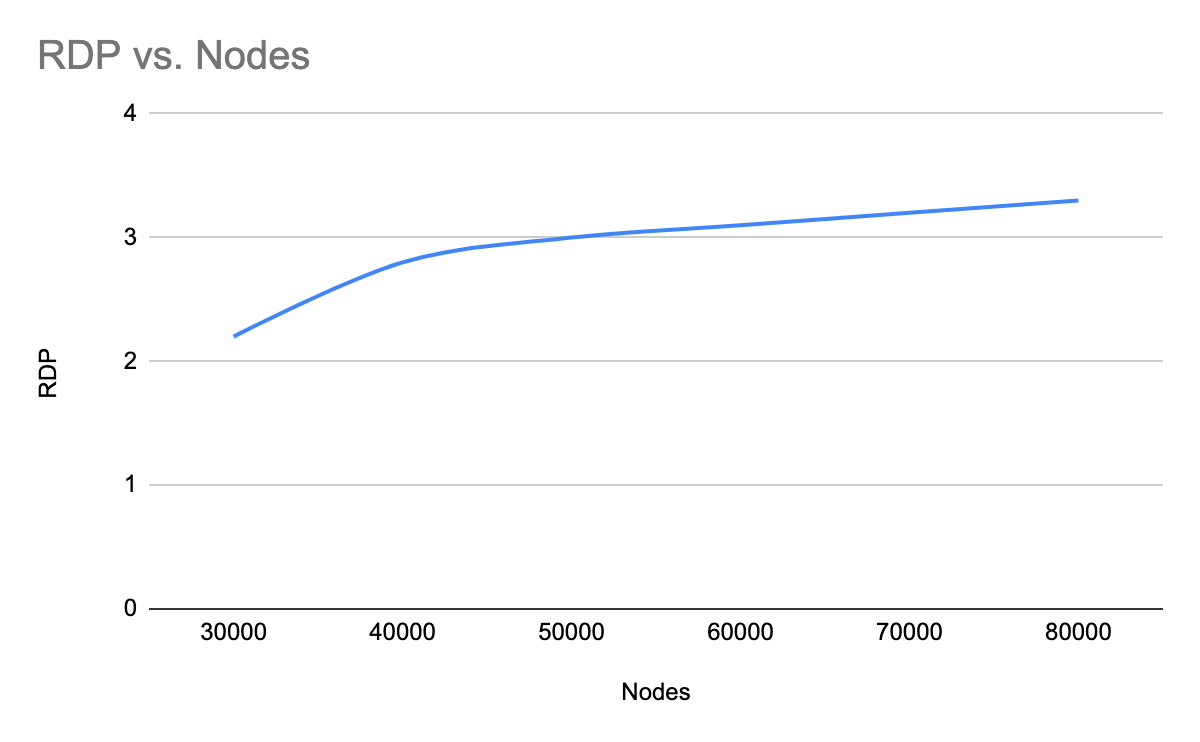

We were also able to confirm some of the earlier results from our research and simulations when it comes to the observed latency as a function of the number of nodes. We have a metric called ‘Relative Delay Penalty’ (RDP) which can be used to illustrate that the message propagation delay grows very little as the Network grows.
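To make the metric concrete, here is a minimal sketch of how an RDP value could be computed. It assumes the definition commonly used for overlay networks, namely the delay of a message travelling through the overlay divided by the delay of a direct connection between the same two nodes. The function names and the sample delays are made up for illustration and are not Testnet measurements.

```python
from statistics import mean


def relative_delay_penalty(overlay_delay_ms: float, direct_delay_ms: float) -> float:
    """Ratio of overlay delivery delay to direct (unicast) delay.

    Assumed definition: a value of 1.0 means the overlay is as fast as a
    direct connection; 2.0 means delivery took twice as long.
    """
    if direct_delay_ms <= 0:
        raise ValueError("direct delay must be positive")
    return overlay_delay_ms / direct_delay_ms


def mean_rdp(samples: list[tuple[float, float]]) -> float:
    """Average RDP over (overlay_delay_ms, direct_delay_ms) pairs."""
    return mean(relative_delay_penalty(o, d) for o, d in samples)


# Hypothetical delay pairs, NOT real Testnet data.
samples = [(300.0, 150.0), (350.0, 175.0), (420.0, 140.0)]

if __name__ == "__main__":
    print(f"mean RDP: {mean_rdp(samples):.2f}")
```

If the mean RDP stays roughly flat while the node count grows, the overlay is adding little extra delay per added node, which is the behaviour described above.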

Why is this important? Imagine that you want to talk to 90,000 people at the same time. In traditional networking, this would mean that you need to be able to contact each person and tell them individually what you want to say. In the decentralised web3 and Streamr stack, you just publish your message and in 350 milliseconds everyone has it everywhere in the world.

Streamr offers scalability out-of-the-box. A centralised system in the cloud would have to “solve” scalability as a separate problem, whereas scalability is built into Streamr. This means going forward the Streamr Network is well suited to run much more than 90,000 nodes. In the Network Whitepaper, the team measured networks of up to 2,000 nodes, whereas the Testnet now ran 90,000 nodes, and there is barely a difference in the observed latency.
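One way to build intuition for why 2,000 and 90,000 nodes can show similar latency is to count forwarding hops in an idealised model. The sketch below assumes every newly reached node forwards the message to a fixed number of nodes that have not seen it yet; the fanout of 4 and the function name are illustrative assumptions, not the Network's actual topology or parameters.

```python
def hops_to_reach(total_nodes: int, fanout: int) -> int:
    """Hops needed for a message to reach `total_nodes` in an idealised
    overlay where each newly reached node forwards to `fanout` fresh nodes.

    This ignores duplicate deliveries and uneven topologies, so it is a
    best-case estimate rather than a model of the real Network.
    """
    if total_nodes < 1 or fanout < 2:
        raise ValueError("need at least 1 node and a fanout of 2 or more")
    reached = 1    # the publisher already has the message
    frontier = 1   # nodes that received it in the latest hop
    hops = 0
    while reached < total_nodes:
        frontier *= fanout
        reached += frontier
        hops += 1
    return hops


if __name__ == "__main__":
    for size in (2_000, 90_000):
        print(size, "nodes ->", hops_to_reach(size, fanout=4), "hops")
```

Under these assumptions, a network 45 times larger needs only a few extra hops, because the hop count grows roughly with the logarithm of the network size.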

The nodes joining the Network do not need to know or trust each other. 90,000 anonymous nodes connected and kept running, even behind NAT and firewalls, with no configuration – an advantage of using WebRTC and a feature that is not possible with a centralised solution.

Coming up next

We are gearing up for the Brubeck Mainnet launch and are busy preparing the airdrop for all 130,000 individual addresses that were connected to the Testnets. More updates on this will follow soon. In the meantime, you can see the full rewards distribution here or use this link and your ETH address:

Next year we will be working on our last milestone―Tatum. This milestone will see the introduction of token economics, staking mechanisms and bounties, and the ability for node runners to mine. We hope you are as excited as we are for what’s to come!

We’ll also follow up in the coming weeks with an in-depth, technical analysis of the testnets.